Re: [PR] [FLINK-35444][pipeline-connector][paimon] Paimon Pipeline Connector support changing column names to lowercase for Hive metastore [flink-cdc]

kyzheng196 commented on code in PR #3569:

URL: https://github.com/apache/flink-cdc/pull/3569#discussion_r1730277217

##

flink-cdc-common/src/main/java/org/apache/flink/cdc/common/schema/Schema.java:

##

@@ -396,6 +397,9 @@ private void checkColumn(String columnName, DataType type) {

* @param columnNames columns that form a unique primary key

*/

public Builder primaryKey(String... columnNames) {

+for (int i = 0; i < columnNames.length; i++) {

+columnNames[i] = columnNames[i].toLowerCase();

Review Comment:

Hi @lvyanquan, thank you for your kind suggestions.

I've modified PaimonMetaDataApplier and PaimonMetadataApplierTest

accordingly and left the common class unchanged. All test cases

pass; I hope this meets your expectations. Many thanks!
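
For readers following the thread: the hunk above lowercases the caller's array in place inside the common `Schema.Builder`. A minimal sketch of a non-mutating, connector-side alternative is shown below. The class and method names are hypothetical and are not taken from the PR; `Locale.ROOT` is used so the result does not depend on the JVM's default locale.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Locale;
import java.util.stream.Collectors;

// Hypothetical helper, not part of Flink CDC or the PR under review.
final class LowercaseColumnsSketch {

    // Returns lowercased copies of the given names and leaves the input array untouched.
    static List<String> toLowerCaseNames(String... columnNames) {
        return Arrays.stream(columnNames)
                // Locale.ROOT avoids locale-specific case mappings (e.g. Turkish dotless i).
                .map(name -> name.toLowerCase(Locale.ROOT))
                .collect(Collectors.toList());
    }
}
```

Keeping the conversion out of the shared builder means other connectors still see the original column names.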

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Created] (FLINK-36150) tables.exclude is not valid if scan.binlog.newly-added-table.enabled is true.

Hongshun Wang created FLINK-36150:

-

Summary: tables.exclude is not valid if scan.binlog.newly-added-table.enabled is true.

Key: FLINK-36150

URL: https://issues.apache.org/jira/browse/FLINK-36150

Project: Flink

Issue Type: Improvement

Components: Flink CDC

Affects Versions: cdc-3.1.1

Reporter: Hongshun Wang

Fix For: cdc-3.2.0

Currently, `tables.exclude` lets users exclude some tables. This works because the tables passed to the DataSource are filtered when MySqlDataSourceFactory creates the DataSource.

However, when `scan.binlog.newly-added-table.enabled` is true, DDL for new tables is read from the binlog and is not filtered by `tables.exclude`.

This Jira aims to pass `tables.exclude` to MySqlSourceConfig and use it when discovering tables in the MySQL database.

--

This message was sent by Atlassian Jira (v8.20.10#820010)
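
To illustrate the behavior FLINK-36150 asks for, here is a minimal sketch of a filter that applies both the include and the exclude pattern to every table, including tables discovered later from the binlog. It assumes both options are plain Java regular expressions matched against `database.table`; the class and method names are hypothetical and do not reflect the actual MySqlSourceConfig API.

```java
import java.util.function.Predicate;
import java.util.regex.Pattern;

// Hypothetical sketch; the real connector's option parsing may differ.
final class TableFilterSketch {

    // Builds a predicate that accepts a table id only if it matches the include
    // pattern and does not match the (optional) exclude pattern.
    static Predicate<String> captured(String includeRegex, String excludeRegex) {
        Pattern include = Pattern.compile(includeRegex);
        Pattern exclude = excludeRegex == null ? null : Pattern.compile(excludeRegex);
        return tableId ->
                include.matcher(tableId).matches()
                        && (exclude == null || !exclude.matcher(tableId).matches());
    }
}
```

The point of the sketch is that the same predicate would be evaluated both at startup and when a newly added table shows up in the binlog, so the two code paths cannot disagree.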

[jira] [Commented] (FLINK-36150) tables.exclude is not valid if scan.binlog.newly-added-table.enabled is true.

[ https://issues.apache.org/jira/browse/FLINK-36150?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17876487#comment-17876487 ]

Hongshun Wang commented on FLINK-36150:

---

I'd like to do it.

[jira] [Updated] (FLINK-36150) tables.exclude is not valid if scan.binlog.newly-added-table.enabled is true.

[ https://issues.apache.org/jira/browse/FLINK-36150?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

ASF GitHub Bot updated FLINK-36150:

---

Labels: pull-request-available (was: )

> tables.exclude is not valid if scan.binlog.newly-added-table.enabled is true.

> -

>

> Key: FLINK-36150

> URL: https://issues.apache.org/jira/browse/FLINK-36150

> Project: Flink

> Issue Type: Improvement

> Components: Flink CDC

> Affects Versions: cdc-3.1.1

> Reporter: Hongshun Wang

> Priority: Blocker

> Labels: pull-request-available

> Fix For: cdc-3.2.0

[PR] [FLINK-36128] Promote LENIENT as the default schema change behavior [flink-cdc]

yuxiqian opened a new pull request, #3574:

URL: https://github.com/apache/flink-cdc/pull/3574

This closes FLINK-36128.

Currently, the default schema evolution mode "EVOLVE" cannot handle exceptions and might not restore correctly from existing state after failover. Until we can "trigger checkpoints on demand", which is not possible prior to Flink 1.19, making "LENIENT" the default option might be more suitable.

This PR also contains a hotfix patch for a PrePartitionOperator broadcasting failure.

[jira] [Updated] (FLINK-36128) Promote LENIENT mode as the default schema evolution behavior

[ https://issues.apache.org/jira/browse/FLINK-36128?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-36128: --- Labels: pull-request-available (was: ) > Promote LENIENT mode as the default schema evolution behavior > - > > Key: FLINK-36128 > URL: https://issues.apache.org/jira/browse/FLINK-36128 > Project: Flink > Issue Type: Bug > Components: Flink CDC >Reporter: yux >Priority: Blocker > Labels: pull-request-available > > Currently, default schema evolution mode "EVOLVE" could not handle > exceptions, and might not be able to restore from existing state correctly > after failover. Before we can "manually trigger checkpoint" that was > introduced in Flink 1.19, making "LENIENT" a default option might be more > suitable. -- This message was sent by Atlassian Jira (v8.20.10#820010)

Re: [PR] [FLINK-36128] Promote LENIENT as the default schema change behavior [flink-cdc]

leonardBang commented on code in PR #3574:

URL: https://github.com/apache/flink-cdc/pull/3574#discussion_r1730301097

##

flink-cdc-cli/src/main/java/org/apache/flink/cdc/cli/parser/YamlPipelineDefinitionParser.java:

##

@@ -172,6 +183,23 @@ private SinkDef toSinkDef(JsonNode sinkNode) {

Optional.ofNullable(sinkNode.get(EXCLUDE_SCHEMA_EVOLUTION_TYPES))

.ifPresent(e -> e.forEach(tag -> excludedSETypes.add(tag.asText())));

+if (includedSETypes.isEmpty()) {

+// If no schema evolution types are specified, include all schema evolution types by

+// default.

+Arrays.stream(SchemaChangeEventTypeFamily.ALL)

+.map(SchemaChangeEventType::getTag)

+.forEach(includedSETypes::add);

+}

+

+if (excludedSETypes.isEmpty()

+&& SchemaChangeBehavior.LENIENT.equals(schemaChangeBehavior)) {

+// In lenient mode, we exclude DROP_TABLE and TRUNCATE_TABLE by default. This could be

+// overridden by manually specifying excluded types.

Review Comment:

minor: we should add schema behavior document ASAP
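
Until that document exists, the defaulting rule described by the comments in this hunk can be sketched as follows. This is only an illustration under stated assumptions: the type tags are modeled as plain strings, the `ALL_TYPES` list is a made-up subset, and the final "included minus excluded" step is an assumption about how the two sets are combined, not a quote of the parser's code.

```java
import java.util.Arrays;
import java.util.Collection;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch of the defaulting logic; names and tags are illustrative only.
final class SchemaEvolutionDefaultsSketch {

    // Illustrative stand-in for "all schema change event types".
    static final List<String> ALL_TYPES =
            Arrays.asList("ADD_COLUMN", "DROP_COLUMN", "DROP_TABLE", "TRUNCATE_TABLE");

    static Set<String> effectiveTypes(Set<String> included, Set<String> excluded, boolean lenient) {
        // No explicit include list means every type is included.
        Collection<String> base = included.isEmpty() ? ALL_TYPES : included;
        Set<String> result = new LinkedHashSet<>(base);

        Set<String> effectiveExcluded = new LinkedHashSet<>(excluded);
        if (excluded.isEmpty() && lenient) {
            // Lenient mode drops the destructive table-level events by default;
            // any user-specified exclude list overrides this default.
            effectiveExcluded.add("DROP_TABLE");
            effectiveExcluded.add("TRUNCATE_TABLE");
        }
        result.removeAll(effectiveExcluded);
        return result;
    }
}
```

Note the consequence of the `excluded.isEmpty()` check: once a user lists any excluded type, the lenient defaults no longer apply, so DROP_TABLE is applied again unless it is listed explicitly.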


Re: [PR] [FLINK-36128] Promote LENIENT as the default schema change behavior [flink-cdc]

yuxiqian commented on code in PR #3574:

URL: https://github.com/apache/flink-cdc/pull/3574#discussion_r1730302627

##

flink-cdc-cli/src/main/java/org/apache/flink/cdc/cli/parser/YamlPipelineDefinitionParser.java:

##

@@ -172,6 +183,23 @@ private SinkDef toSinkDef(JsonNode sinkNode) {

Optional.ofNullable(sinkNode.get(EXCLUDE_SCHEMA_EVOLUTION_TYPES))

.ifPresent(e -> e.forEach(tag -> excludedSETypes.add(tag.asText())));

+if (includedSETypes.isEmpty()) {

+// If no schema evolution types are specified, include all schema evolution types by

+// default.

+Arrays.stream(SchemaChangeEventTypeFamily.ALL)

+.map(SchemaChangeEventType::getTag)

+.forEach(includedSETypes::add);

+}

+

+if (excludedSETypes.isEmpty()

+&& SchemaChangeBehavior.LENIENT.equals(schemaChangeBehavior)) {

+// In lenient mode, we exclude DROP_TABLE and TRUNCATE_TABLE by default. This could be

+// overridden by manually specifying excluded types.

Review Comment:

Tracked with FLINK-36151.


[jira] [Created] (FLINK-36151) Add documentations for Schema Evolution related options

yux created FLINK-36151: --- Summary: Add documentations for Schema Evolution related options Key: FLINK-36151 URL: https://issues.apache.org/jira/browse/FLINK-36151 Project: Flink Issue Type: Improvement Components: Flink CDC Reporter: yux CDC Documentations should be updated to reflect recent changes of schema change features, like new TRY_EVOLVE and LENIENT mode, `include.schema.change` and `exclude.schema.change` options. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Commented] (FLINK-36145) Change JobSpec.flinkStateSnapshotReference to snapshotReference

[ https://issues.apache.org/jira/browse/FLINK-36145?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17876505#comment-17876505 ] Mate Czagany commented on FLINK-36145: -- I think snapshotReference would work well. > Change JobSpec.flinkStateSnapshotReference to snapshotReference > --- > > Key: FLINK-36145 > URL: https://issues.apache.org/jira/browse/FLINK-36145 > Project: Flink > Issue Type: Sub-task > Components: Kubernetes Operator >Reporter: Gyula Fora >Priority: Blocker > Fix For: kubernetes-operator-1.10.0 > > > To avoid redundant / verbose naming we should change this field name in the > spec before it's released: > JobSpec.flinkStateSnapshotReference -> JobSpec.snapshotReference or > JobSpec.stateSnapshotReference -- This message was sent by Atlassian Jira (v8.20.10#820010)

[PR] [FLINK-36145][snapshot] Rename flinkStateSnapshotReference to snapshotReference [flink-kubernetes-operator]

mateczagany opened a new pull request, #870:

URL: https://github.com/apache/flink-kubernetes-operator/pull/870

## What is the purpose of the change

Rename JobSpec.flinkStateSnapshotReference to JobSpec.snapshotReference. This should make the field less verbose and easier for users to remember. The flinkStateSnapshotReference field has not been officially released yet, so this change should not affect production users.

## Brief change log

- Rename in Java, Markdown files and examples

- Regenerate CRDs

## Verifying this change

- Unit tests

## Does this pull request potentially affect one of the following parts:

- Dependencies (does it add or upgrade a dependency): no

- The public API, i.e., is any changes to the `CustomResourceDescriptors`: yes

- Core observer or reconciler logic that is regularly executed: no

## Documentation

- Does this pull request introduce a new feature? no

- If yes, how is the feature documented? not applicable

[jira] [Updated] (FLINK-36145) Change JobSpec.flinkStateSnapshotReference to snapshotReference

[ https://issues.apache.org/jira/browse/FLINK-36145?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-36145: --- Labels: pull-request-available (was: ) > Change JobSpec.flinkStateSnapshotReference to snapshotReference > --- > > Key: FLINK-36145 > URL: https://issues.apache.org/jira/browse/FLINK-36145 > Project: Flink > Issue Type: Sub-task > Components: Kubernetes Operator >Reporter: Gyula Fora >Priority: Blocker > Labels: pull-request-available > Fix For: kubernetes-operator-1.10.0 > > > To avoid redundant / verbose naming we should change this field name in the > spec before it's released: > JobSpec.flinkStateSnapshotReference -> JobSpec.snapshotReference or > JobSpec.stateSnapshotReference -- This message was sent by Atlassian Jira (v8.20.10#820010)

Re: [PR] [FLINK-35177] Fix DataGen Connector documentation [flink]

morozov commented on PR #24692:

URL: https://github.com/apache/flink/pull/24692#issuecomment-2308895535

@GOODBOY008 done. FWIW, you can reference code blocks right on GitHub. That way, they are more readable and can be navigated to:

https://github.com/apache/flink/blob/4faf0966766e3734792f80ed66e512aa3033cacd/flink-connectors/flink-connector-datagen/src/main/java/org/apache/flink/connector/datagen/source/DataGeneratorSource.java#L79-L86

[jira] [Commented] (FLINK-36145) Change JobSpec.flinkStateSnapshotReference to snapshotReference

[ https://issues.apache.org/jira/browse/FLINK-36145?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17876532#comment-17876532 ]

Gyula Fora commented on FLINK-36145:

---

[~mateczagany] let's hold off on this for a little bit, I would like to ask 1-2 people just to get it right.

On a second thought, snapshotReference feels a little off. Previously we had initialSavepointPath which was pretty straightforward. Maybe initialStateReference is more descriptive. Or stateReference if we want shorter but that may be a bit misleading given the behaviour.

[~thw] [~rmetzger] any thoughts?

[jira] [Commented] (FLINK-36145) Change JobSpec.flinkStateSnapshotReference to snapshotReference

[ https://issues.apache.org/jira/browse/FLINK-36145?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17876534#comment-17876534 ]

Thomas Weise commented on FLINK-36145:

---

`snapshotReference` sounds a bit misleading, `initialStateReference` is better. I think the "initial" part is actually important so it is clear that the reference only applies till next snapshot.

Re: [PR] [FLINK-34555][table] Migrate JoinConditionTypeCoerceRule to java. [flink]

snuyanzin commented on code in PR #24420:

URL: https://github.com/apache/flink/pull/24420#discussion_r1730414834

##

flink-table/flink-table-planner/src/main/java/org/apache/flink/table/planner/plan/rules/logical/JoinConditionTypeCoerceRule.java:

##

@@ -0,0 +1,178 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.table.planner.plan.rules.logical;

+

+import org.apache.flink.table.api.TableException;

+import org.apache.flink.table.planner.plan.utils.FlinkRexUtil;

+

+import org.apache.calcite.plan.RelOptRuleCall;

+import org.apache.calcite.plan.RelOptUtil;

+import org.apache.calcite.plan.RelRule;

+import org.apache.calcite.rel.core.Join;

+import org.apache.calcite.rel.type.RelDataType;

+import org.apache.calcite.rel.type.RelDataTypeFactory;

+import org.apache.calcite.rex.RexBuilder;

+import org.apache.calcite.rex.RexCall;

+import org.apache.calcite.rex.RexInputRef;

+import org.apache.calcite.rex.RexNode;

+import org.apache.calcite.sql.SqlKind;

+import org.apache.calcite.sql.type.SqlTypeUtil;

+import org.apache.calcite.tools.RelBuilder;

+import org.immutables.value.Value;

+

+import java.util.Arrays;

+import java.util.List;

+import java.util.stream.Collectors;

+

+/**

+ * Planner rule that coerces the both sides of EQUALS(`=`) operator in Join condition to the same

+ * type while sans nullability.

+ *

+ * For most cases, we already did the type coercion during type validation by implicit type

+ * coercion or during sqlNode to relNode conversion, this rule just does a rechecking to ensure a

+ * strongly uniform equals type, so that during a HashJoin shuffle we can have the same hashcode of

+ * the same value.

+ */

+@Value.Enclosing

+public class JoinConditionTypeCoerceRule

+extends

RelRule {

+

+public static final JoinConditionTypeCoerceRule INSTANCE =

+

JoinConditionTypeCoerceRule.JoinConditionTypeCoerceRuleConfig.DEFAULT.toRule();

+

+private JoinConditionTypeCoerceRule(JoinConditionTypeCoerceRuleConfig

config) {

+super(config);

+}

+

+@Override

+public boolean matches(RelOptRuleCall call) {

+Join join = call.rel(0);

+if (join.getCondition().isAlwaysTrue()) {

+return false;

+}

+RelDataTypeFactory typeFactory = call.builder().getTypeFactory();

+return hasEqualsRefsOfDifferentTypes(typeFactory, join.getCondition());

+}

+

+@Override

+public void onMatch(RelOptRuleCall call) {

+Join join = call.rel(0);

+RelBuilder builder = call.builder();

+RexBuilder rexBuilder = builder.getRexBuilder();

+RelDataTypeFactory typeFactory = builder.getTypeFactory();

+

+List joinFilters =

RelOptUtil.conjunctions(join.getCondition());

+List newJoinFilters =

+joinFilters.stream()

+.map(

+filter -> {

+if (filter instanceof RexCall) {

+RexCall c = (RexCall) filter;

+if (c.getKind().equals(SqlKind.EQUALS)) {

Review Comment:

```suggestion

if (c.getKind() == SqlKind.EQUALS) {

```

it's better to follow same approach in code

e.g. below it is compared with `==` which is preferred for enums
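
A small, self-contained illustration of why `==` is usually preferred for enums: it is null-safe and the compiler rejects comparisons between unrelated types, whereas `equals` throws on a null receiver. The `Kind` enum below is a hypothetical stand-in for Calcite's `SqlKind`, used only so the sketch compiles on its own.

```java
// Hypothetical stand-in types; not Calcite's SqlKind.
final class EnumCompareSketch {

    enum Kind { EQUALS, NOT_EQUALS }

    // Safe even when kind is null: the comparison simply evaluates to false.
    static boolean isEqualsKind(Kind kind) {
        return kind == Kind.EQUALS;
    }

    // Same check via equals(); throws NullPointerException when kind is null.
    static boolean isEqualsKindViaEquals(Kind kind) {
        return kind.equals(Kind.EQUALS);
    }
}
```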


Re: [PR] [FLINK-34505][table] Migrate WindowGroupReorderRule to java. [flink]

snuyanzin commented on PR #24375: URL: https://github.com/apache/flink/pull/24375#issuecomment-2308961960 still failing on ci

Re: [PR] [FLINK-35177] Fix DataGen Connector documentation [flink]

snuyanzin commented on PR #24692:

URL: https://github.com/apache/flink/pull/24692#issuecomment-2308984427

If we are talking about examples, there is already an existing one in the examples module that is quite close to the one from the description:

https://github.com/apache/flink/blob/4faf0966766e3734792f80ed66e512aa3033cacd/flink-examples/flink-examples-streaming/src/main/java/org/apache/flink/streaming/examples/datagen/DataGenerator.java#L36-L43

How about using the same approach both in the docs and in this example?

Re: [PR] [FLINK-34467] bump flink version to 1.20.0 [flink-connector-kafka]

HuangZhenQiu commented on PR #111:

URL: https://github.com/apache/flink-connector-kafka/pull/111#issuecomment-2309026627

@AHeise Thanks for the reply. I totally understand the pain points of maintaining compatibility with multiple Flink versions in a connector. Each Flink release introduces some new experimental interfaces in the API or runtime. Shall we consider the solution from Apache Hudi or Apache Iceberg? Both of them use a separate module for each Flink version, and some classes are replicated into the different modules as needed.

https://github.com/apache/hudi/tree/master/hudi-flink-datasource

[jira] [Created] (FLINK-36152) Traverse through the superclass hierarchy to extract generic type.

Xinglong Wang created FLINK-36152:

-

Summary: Traverse through the superclass hierarchy to extract

generic type.

Key: FLINK-36152

URL: https://issues.apache.org/jira/browse/FLINK-36152

Project: Flink

Issue Type: Bug

Components: Table SQL / Planner

Affects Versions: 1.16.1, 2.0.0

Reporter: Xinglong Wang

In our case, there's a `ConcreteLookupFunction extends AbstractLookupFunction`,

and `AbstractLookupFunction extends TableFunction`.

However, `Class#getGenericSuperclass` only returns the direct superclass, so it

cannot extract the correct generic type `RowData`.

I can reproduce the exception below:

{code:java}

//

flink-table/flink-table-planner/src/test/java/org/apache/flink/table/planner/runtime/stream/sql/FunctionITCase.java

@Test

void testLookupTableFunctionWithoutHintLevel2()

throws ExecutionException, InterruptedException {

testLookupTableFunctionBase(LookupTableWithoutHintLevel2Function.class.getName());

}

// ... ...

public static class LookupTableWithoutHintLevel2Function

extends LookupTableWithoutHintLevel1Function {}{code}

{code:java}

org.apache.flink.table.api.ValidationException: Cannot extract a data type from

an internal 'org.apache.flink.table.data.RowData' class without further

information. Please use annotations to define the full logical type.

at

org.apache.flink.table.types.extraction.ExtractionUtils.extractionError(ExtractionUtils.java:424)

at

org.apache.flink.table.types.extraction.ExtractionUtils.extractionError(ExtractionUtils.java:419)

at

org.apache.flink.table.types.extraction.DataTypeExtractor.checkForCommonErrors(DataTypeExtractor.java:425)

at

org.apache.flink.table.types.extraction.DataTypeExtractor.extractDataTypeOrError(DataTypeExtractor.java:330)

at

org.apache.flink.table.types.extraction.DataTypeExtractor.extractDataTypeOrRawWithTemplate(DataTypeExtractor.java:290)

... 53 more

{code}
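
The traversal named in the summary can be sketched with plain reflection. The snippet below is a minimal illustration, not the actual fix in Flink's extraction utilities: the class names (`Base`, `Level1`, `Level2`) and the helper `firstTypeArgumentOf` are hypothetical, and the sketch only handles the case where some ancestor binds the type argument to a concrete class.

```java
import java.lang.reflect.ParameterizedType;
import java.lang.reflect.Type;
import java.util.Optional;

// Hypothetical sketch; not Flink's ExtractionUtils or DataTypeExtractor.
final class GenericSuperclassSketch {

    // Walks up from 'start' until an ancestor directly extends 'target' with type
    // arguments, then returns the first type argument of that parameterization.
    static Optional<Type> firstTypeArgumentOf(Class<?> start, Class<?> target) {
        for (Class<?> c = start; c != null && c != Object.class; c = c.getSuperclass()) {
            Type generic = c.getGenericSuperclass();
            if (generic instanceof ParameterizedType) {
                ParameterizedType parameterized = (ParameterizedType) generic;
                if (parameterized.getRawType() == target) {
                    return Optional.of(parameterized.getActualTypeArguments()[0]);
                }
            }
        }
        return Optional.empty();
    }

    // Illustrative two-level hierarchy mirroring the report's Level1/Level2 test classes.
    static class Base<T> {}

    static class Level1 extends Base<String> {}

    static class Level2 extends Level1 {}
}
```

Calling `getGenericSuperclass()` on `Level2` alone yields the raw class `Level1`, which carries no type arguments; the loop is what reaches `Base<String>`. A fuller solution would also need to resolve type variables when an intermediate class is itself generic, which this sketch does not attempt.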

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[PR] [FLINK-36152] Fix incorrect type extraction in case of cascaded inheritance when DataTypeHint is not configured [flink]

littleeleventhwolf opened a new pull request, #25251: URL: https://github.com/apache/flink/pull/25251

[jira] [Updated] (FLINK-36152) Traverse through the superclass hierarchy to extract generic type.

[

https://issues.apache.org/jira/browse/FLINK-36152?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated FLINK-36152:

---

Labels: pull-request-available (was: )

> Traverse through the superclass hierarchy to extract generic type.

> --

>

> Key: FLINK-36152

> URL: https://issues.apache.org/jira/browse/FLINK-36152

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Planner

>Affects Versions: 2.0.0, 1.16.1

>Reporter: Xinglong Wang

>Priority: Major

> Labels: pull-request-available

>


Re: [PR] [FLINK-36152] Fix incorrect type extraction in case of cascaded inheritance when DataTypeHint is not configured [flink]

flinkbot commented on PR #25251: URL: https://github.com/apache/flink/pull/25251#issuecomment-2309047657 ## CI report: * 3f1b92e8d262a3a601624ade2b17bf126f553117 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build

Re: [PR] [FLINK-35589][cdc-common] Support MemorySize type in FlinkCDC ConfigOptions. [flink-cdc]

github-actions[bot] commented on PR #3437: URL: https://github.com/apache/flink-cdc/pull/3437#issuecomment-2309056140 This pull request has been automatically marked as stale because it has not had recent activity for 60 days. It will be closed in 30 days if no further activity occurs.

[jira] [Updated] (FLINK-35589) Support MemorySize type in FlinkCDC ConfigOptions

[ https://issues.apache.org/jira/browse/FLINK-35589?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-35589: --- Labels: pull-request-available (was: ) > Support MemorySize type in FlinkCDC ConfigOptions > -- > > Key: FLINK-35589 > URL: https://issues.apache.org/jira/browse/FLINK-35589 > Project: Flink > Issue Type: Improvement > Components: Flink CDC >Affects Versions: cdc-3.2.0 >Reporter: LvYanquan >Priority: Not a Priority > Labels: pull-request-available > Fix For: cdc-3.2.0 > > > This allow user to set MemorySize config type like Flink. > -- This message was sent by Atlassian Jira (v8.20.10#820010)

Re: [PR] [FLINK-34467] bump flink version to 1.20.0 [flink-connector-kafka]

HuangZhenQiu commented on PR #111: URL: https://github.com/apache/flink-connector-kafka/pull/111#issuecomment-2309083950 I think we can drop support for flink 1.17 and flink 1.18 first in this PR https://github.com/apache/flink-connector-kafka/pull/102. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[PR] [FLINK-36151] Add schema evolution related docs [flink-cdc]

yuxiqian opened a new pull request, #3575: URL: https://github.com/apache/flink-cdc/pull/3575 This closes FLINK-36151 by adding missing schema evolution related concepts into Flink CDC docs. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-36151) Add documentations for Schema Evolution related options

[ https://issues.apache.org/jira/browse/FLINK-36151?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-36151: --- Labels: pull-request-available (was: ) > Add documentations for Schema Evolution related options > --- > > Key: FLINK-36151 > URL: https://issues.apache.org/jira/browse/FLINK-36151 > Project: Flink > Issue Type: Improvement > Components: Flink CDC >Reporter: yux >Priority: Major > Labels: pull-request-available > > The CDC documentation should be updated to reflect recent changes to schema > change features, such as the new TRY_EVOLVE and LENIENT modes and the > `include.schema.change` and `exclude.schema.change` options. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Created] (FLINK-36153) MySQL fails to handle schema change events In Timestamp or Earliest Offset startup mode

yux created FLINK-36153: --- Summary: MySQL fails to handle schema change events In Timestamp or Earliest Offset startup mode Key: FLINK-36153 URL: https://issues.apache.org/jira/browse/FLINK-36153 Project: Flink Issue Type: Improvement Components: Flink CDC Reporter: yux Currently, if the MySQL source tries to start up from a binlog position where there are schema changes within range, the job will fail due to non-replayable schema change events. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Commented] (FLINK-36153) MySQL fails to handle schema change events In Timestamp or Earliest Offset startup mode

[ https://issues.apache.org/jira/browse/FLINK-36153?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17876559#comment-17876559 ] yux commented on FLINK-36153: - [~Leonard] Please assign this to me. > MySQL fails to handle schema change events In Timestamp or Earliest Offset > startup mode > --- > > Key: FLINK-36153 > URL: https://issues.apache.org/jira/browse/FLINK-36153 > Project: Flink > Issue Type: Improvement > Components: Flink CDC >Reporter: yux >Priority: Major > > Currently, if the MySQL source tries to start up from a binlog position where > there are schema changes within range, the job will fail due to non-replayable > schema change events. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Updated] (FLINK-36153) MySQL fails to handle schema change events In Timestamp or Earliest Offset startup mode

[ https://issues.apache.org/jira/browse/FLINK-36153?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] yux updated FLINK-36153: Issue Type: Bug (was: Improvement) > MySQL fails to handle schema change events In Timestamp or Earliest Offset > startup mode > --- > > Key: FLINK-36153 > URL: https://issues.apache.org/jira/browse/FLINK-36153 > Project: Flink > Issue Type: Bug > Components: Flink CDC >Reporter: yux >Priority: Major > > Currently, if the MySQL source tries to start up from a binlog position where > there are schema changes within range, the job will fail due to non-replayable > schema change events. -- This message was sent by Atlassian Jira (v8.20.10#820010)

Re: [PR] [FLINK-36142][table] Remove TestTableSourceWithTime and its subclasses to prepare removing TableEnvironmentInternal#registerTableSourceInternal [flink]

xuyangzhong commented on PR #25243: URL: https://github.com/apache/flink/pull/25243#issuecomment-2309129941 @flinkbot run azure -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

Re: [PR] [FLINK-35177] Fix DataGen Connector documentation [flink]

morozov commented on PR #24692: URL: https://github.com/apache/flink/pull/24692#issuecomment-2309145899 > how about having the same approach both in docs and in this example? @snuyanzin what exactly do you propose? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-36131) FlinkSQL upgraded from 1.13.1 to 1.18.1 metrics does not display data

[ https://issues.apache.org/jira/browse/FLINK-36131?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17876564#comment-17876564 ] mumu commented on FLINK-36131: -- [~hackergin] I am using flink-sql-connector-kafka-3.0.2.jar. > FlinkSQL upgraded from 1.13.1 to 1.18.1 metrics does not display data > - > > Key: FLINK-36131 > URL: https://issues.apache.org/jira/browse/FLINK-36131 > Project: Flink > Issue Type: Bug >Affects Versions: 1.18.1 >Reporter: mumu >Priority: Major > Attachments: image-2024-08-22-17-33-21-336.png, > image-2024-08-23-16-42-27-450.png, 截屏2024-08-24 14.01.51.png > > > After upgrading FlinkSQL from 1.13.1 to 1.18.1, the following metric does not display data: > 0.Source___call[1].KafkaSourceReader.topic.xxxll_agg.partition.1.currentOffset > I can confirm that there was data available before the upgrade. > !image-2024-08-22-17-33-21-336.png! -- This message was sent by Atlassian Jira (v8.20.10#820010)

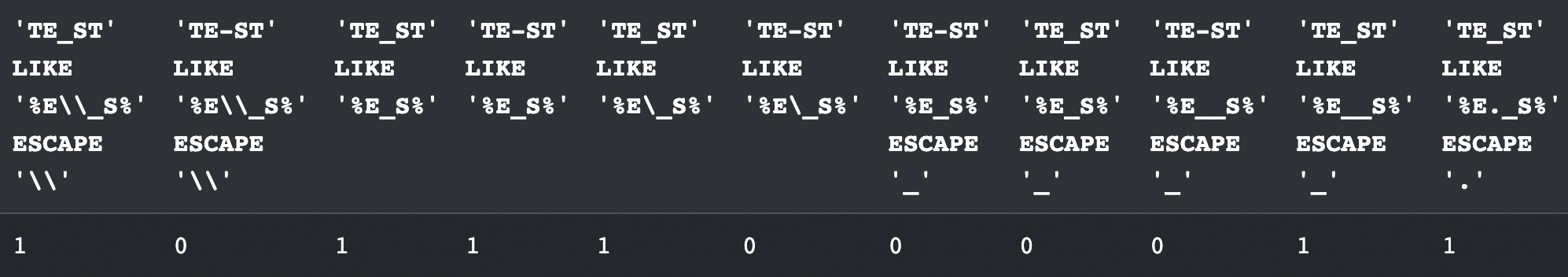

[jira] [Assigned] (FLINK-36100) Support ESCAPE in built-in function LIKE formally

[

https://issues.apache.org/jira/browse/FLINK-36100?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

lincoln lee reassigned FLINK-36100:

---

Assignee: Dylan He

> Support ESCAPE in built-in function LIKE formally

> -

>

> Key: FLINK-36100

> URL: https://issues.apache.org/jira/browse/FLINK-36100

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Planner

>Reporter: Dylan He

>Assignee: Dylan He

>Priority: Major

> Labels: pull-request-available

>

> Flink does not formally support ESCAPE in built-in function LIKE, but in some

> cases we do need it because '_' and '%' are interpreted as special wildcard

> characters, preventing their use in their literal sense.

> And currently, if we forcefully use ESCAPE characters, we will get unexpected

> results like the cases below.

> {code:SQL}

> > SELECT 'TE_ST' LIKE '%E\_S%';

> FALSE

> > SELECT 'TE_ST' LIKE '%E\_S%' ESCAPE '\';

> ERROR

> {code}
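For illustration only, the behaviour the issue asks for can be sketched in Java by translating a LIKE pattern with an explicit ESCAPE character into a regular expression. The class and method names below are hypothetical, and this is not Flink's `SqlLikeUtils` code. As a simplification, the sketch accepts any character after the escape character and matches it literally.

```java
import java.util.regex.Pattern;

// Hypothetical sketch, not Flink's implementation.
final class LikeEscapeSketch {

    /** Translates a SQL LIKE pattern into a Java regex, honoring one escape character. */
    static String toRegex(String sqlPattern, char escape) {
        StringBuilder regex = new StringBuilder();
        for (int i = 0; i < sqlPattern.length(); i++) {
            char c = sqlPattern.charAt(i);
            if (c == escape) {
                if (i == sqlPattern.length() - 1) {
                    throw new IllegalArgumentException("Dangling escape character at index " + i);
                }
                // The character after the escape is matched literally.
                regex.append(Pattern.quote(String.valueOf(sqlPattern.charAt(++i))));
            } else if (c == '_') {
                regex.append('.'); // '_' matches exactly one character
            } else if (c == '%') {
                regex.append(".*"); // '%' matches any sequence of characters
            } else {
                regex.append(Pattern.quote(String.valueOf(c)));
            }
        }
        return regex.toString();
    }

    /** Evaluates {@code value LIKE sqlPattern ESCAPE escape}. */
    static boolean like(String value, String sqlPattern, char escape) {
        return Pattern.compile(toRegex(sqlPattern, escape), Pattern.DOTALL)
                .matcher(value)
                .matches();
    }

    public static void main(String[] args) {
        System.out.println(like("TE_ST", "%E\\_S%", '\\')); // escaped '_' must be a literal underscore
        System.out.println(like("TE-ST", "%E\\_S%", '\\')); // '-' is not a literal underscore
    }
}
```

Under these assumptions, `'TE_ST' LIKE '%E\_S%' ESCAPE '\'` evaluates to true and the same pattern against `'TE-ST'` evaluates to false, while the unescaped pattern `'%E_S%'` matches both.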


[jira] [Comment Edited] (FLINK-36141) [mysql]Set the scanNewlyAddedTableEnabled is true, but does not support automatic capture new added table

[ https://issues.apache.org/jira/browse/FLINK-36141?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17876218#comment-17876218 ] zhongyuandong edited comment on FLINK-36141 at 8/26/24 2:28 AM: [~kunni] Is this supported only in flink-cdc 3.3.0? We are using flink-cdc-source-connectors 3.0.1, and switching to flink-cdc-pipeline-connectors would be a pretty big adjustment for us. How can I use this feature? When will it be available? was (Author: JIRAUSER306741): [~kunni] Is flink-cdc 3.4.0 only supported? We are using flink-cdc-source-connectors 3.0.1, how can I use this feature? When will it be used? > [mysql]Set the scanNewlyAddedTableEnabled is true, but does not support > automatic capture new added table > - > > Key: FLINK-36141 > URL: https://issues.apache.org/jira/browse/FLINK-36141 > Project: Flink > Issue Type: Bug > Components: Flink CDC >Affects Versions: cdc-3.1.0 >Reporter: zhongyuandong >Priority: Critical > Attachments: image-2024-08-23-11-07-26-384.png, > image-2024-08-23-11-07-38-182.png, image-2024-08-23-11-08-31-324.png, > image-2024-08-23-11-08-44-156.png > > > 1、 see the flink-cdc3.0.1 debezium reader stage, specially added > scanNewlyAddedTableEnabled is true filtered out, so lead to be inaccessible > to the new table without restart? Based on 3.0.1 version design, testing the > scanNewlyAddedTableEnabled = false (lost manually add table, restart > operations cannot identify to), but it can automatically capture new added > table with regular expressions without restarting them, is this a bug or > purposely so design? (Why is it designed this way?) > 2、 flink-cdc2.3.0 without this logic, can support the capture new added > table. TODO: there is still very little chance that we can't capture new > added table. Is this the reason why flink-cdc2.4.0 and later versions no > longer support dynamic capture new added table? > 3. Which version solves this problem? How to solve it? > !image-2024-08-23-11-08-31-324.png! 
> !image-2024-08-23-11-08-44-156.png! -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Assigned] (FLINK-36153) MySQL fails to handle schema change events In Timestamp or Earliest Offset startup mode

[ https://issues.apache.org/jira/browse/FLINK-36153?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Leonard Xu reassigned FLINK-36153: -- Assignee: yux > MySQL fails to handle schema change events In Timestamp or Earliest Offset > startup mode > --- > > Key: FLINK-36153 > URL: https://issues.apache.org/jira/browse/FLINK-36153 > Project: Flink > Issue Type: Bug > Components: Flink CDC >Reporter: yux >Assignee: yux >Priority: Major > > Currently, if the MySQL source tries to start up from a binlog position where > there are schema changes within range, the job will fail due to non-replayable > schema change events. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Commented] (FLINK-36005) Specify to get part of the potgresql field

[ https://issues.apache.org/jira/browse/FLINK-36005?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17876569#comment-17876569 ] 宇宙先生 commented on FLINK-36005: -- Who is looking at this issue? I think it is a valuable one. Thanks. > Specify to get part of the potgresql field > --- > > Key: FLINK-36005 > URL: https://issues.apache.org/jira/browse/FLINK-36005 > Project: Flink > Issue Type: Bug > Components: Flink CDC >Affects Versions: cdc-3.1.1 >Reporter: 宇宙先生 >Priority: Critical > Attachments: image-2024-08-08-14-39-10-566.png > > > When I use Flink CDC to ingest data from PostgreSQL, I can only read > some of a table's fields, not all of them, because of privileges: there are some fields > that I have no permission to see and some that I do. However, the job fails > with a privilege error. When I debugged the Flink CDC program, I found that the > initialization phase requires permissions on the whole table. I guess > this is an area that can be optimized; please consider it. > !image-2024-08-08-14-39-10-566.png! > many thanks. -- This message was sent by Atlassian Jira (v8.20.10#820010)

Re: [PR] [FLINK-36100][table] Support ESCAPE in built-in function LIKE [flink]

lincoln-lil commented on code in PR #25225:

URL: https://github.com/apache/flink/pull/25225#discussion_r1730585595

##

flink-table/flink-table-planner/src/test/scala/org/apache/flink/table/planner/expressions/ScalarFunctionsTest.scala:

##

@@ -387,55 +387,91 @@ class ScalarFunctionsTest extends ScalarTypesTestBase {

@Test

def testLike(): Unit = {

+// true

testAllApis('f0.like("Th_s%"), "f0 LIKE 'Th_s%'", "TRUE")

-

testAllApis('f0.like("%is a%"), "f0 LIKE '%is a%'", "TRUE")

-

-testSqlApi("'abcxxxdef' LIKE 'abcx%'", "TRUE")

-testSqlApi("'abcxxxdef' LIKE '%%def'", "TRUE")

-testSqlApi("'abcxxxdef' LIKE 'abcxxxdef'", "TRUE")

-testSqlApi("'abcxxxdef' LIKE '%xdef'", "TRUE")

-testSqlApi("'abcxxxdef' LIKE 'abc%def%'", "TRUE")

-testSqlApi("'abcxxxdef' LIKE '%abc%def'", "TRUE")

-testSqlApi("'abcxxxdef' LIKE '%abc%def%'", "TRUE")

-testSqlApi("'abcxxxdef' LIKE 'abc%def'", "TRUE")

+testAllApis("abcxxxdef".like("abcx%"), "'abcxxxdef' LIKE 'abcx%'", "TRUE")

+testAllApis("abcxxxdef".like("%%def"), "'abcxxxdef' LIKE '%%def'", "TRUE")

+testAllApis("abcxxxdef".like("abcxxxdef"), "'abcxxxdef' LIKE 'abcxxxdef'",

"TRUE")

+testAllApis("abcxxxdef".like("%xdef"), "'abcxxxdef' LIKE '%xdef'", "TRUE")

+testAllApis("abcxxxdef".like("abc%def%"), "'abcxxxdef' LIKE 'abc%def%'",

"TRUE")

+testAllApis("abcxxxdef".like("%abc%def"), "'abcxxxdef' LIKE '%abc%def'",

"TRUE")

+testAllApis("abcxxxdef".like("%abc%def%"), "'abcxxxdef' LIKE '%abc%def%'",

"TRUE")

+testAllApis("abcxxxdef".like("abc%def"), "'abcxxxdef' LIKE 'abc%def'",

"TRUE")

// false

-testSqlApi("'abcxxxdef' LIKE 'abdxxxdef'", "FALSE")

-testSqlApi("'abcxxxdef' LIKE '%xqef'", "FALSE")

-testSqlApi("'abcxxxdef' LIKE 'abc%qef%'", "FALSE")

-testSqlApi("'abcxxxdef' LIKE '%abc%qef'", "FALSE")

-testSqlApi("'abcxxxdef' LIKE '%abc%qef%'", "FALSE")

-testSqlApi("'abcxxxdef' LIKE 'abc%qef'", "FALSE")

+testAllApis("abcxxxdef".like("abdxxxdef"), "'abcxxxdef' LIKE 'abdxxxdef'",

"FALSE")

+testAllApis("abcxxxdef".like("%xqef"), "'abcxxxdef' LIKE '%xqef'", "FALSE")

+testAllApis("abcxxxdef".like("abc%qef%"), "'abcxxxdef' LIKE 'abc%qef%'",

"FALSE")

+testAllApis("abcxxxdef".like("%abc%qef"), "'abcxxxdef' LIKE '%abc%qef'",

"FALSE")

+testAllApis("abcxxxdef".like("%abc%qef%"), "'abcxxxdef' LIKE '%abc%qef%'",

"FALSE")

+testAllApis("abcxxxdef".like("abc%qef"), "'abcxxxdef' LIKE 'abc%qef'",

"FALSE")

+

+// reported in FLINK-36100

+testAllApis("TE_ST".like("%E_S%"), "'TE_ST' LIKE '%E_S%'", "TRUE")

+testAllApis("TE-ST".like("%E_S%"), "'TE-ST' LIKE '%E_S%'", "TRUE")

+testAllApis("TE_ST".like("%E\\_S%"), "'TE_ST' LIKE '%E\\_S%'", "TRUE")

+testAllApis("TE-ST".like("%E\\_S%"), "'TE-ST' LIKE '%E\\_S%'", "FALSE")

}

@Test

def testNotLike(): Unit = {

testAllApis(!'f0.like("Th_s%"), "f0 NOT LIKE 'Th_s%'", "FALSE")

-

testAllApis(!'f0.like("%is a%"), "f0 NOT LIKE '%is a%'", "FALSE")

+

+// reported in FLINK-36100

+testSqlApi("'TE_ST' NOT LIKE '%E_S%'", "FALSE")

+testSqlApi("'TE-ST' NOT LIKE '%E_S%'", "FALSE")

+testSqlApi("'TE_ST' NOT LIKE '%E\\_S%'", "FALSE")

+testSqlApi("'TE-ST' NOT LIKE '%E\\_S%'", "TRUE")

}

@Test

def testLikeWithEscape(): Unit = {

Review Comment:

Add cases with invalid escape chars

##

flink-table/flink-table-api-java/src/main/java/org/apache/flink/table/functions/SqlLikeUtils.java:

##

@@ -108,15 +108,18 @@ static String sqlToRegexLike(String sqlPattern, char

escapeChar) {

final StringBuilder javaPattern = new StringBuilder(len + len);

for (i = 0; i < len; i++) {

char c = sqlPattern.charAt(i);

-if (JAVA_REGEX_SPECIALS.indexOf(c) >= 0) {

-javaPattern.append('\\');

-}

if (c == escapeChar) {

if (i == (sqlPattern.length() - 1)) {

throw invalidEscapeSequence(sqlPattern, i);

}

char nextChar = sqlPattern.charAt(i + 1);

-if ((nextChar == '_') || (nextChar == '%') || (nextChar ==

escapeChar)) {

+if ((nextChar == '_') || (nextChar == '%')) {

Review Comment:

We'd better also add some tests into `FlinkSqlLikeUtilsTest` to cover these

changes.

##

docs/data/sql_functions.yml:

##

@@ -49,10 +49,10 @@ comparison:

- sql: value1 NOT BETWEEN [ ASYMMETRIC | SYMMETRIC ] value2 AND value3

description: By default (or with the ASYMMETRIC keyword), returns TRUE if

value1 is less than value2 or greater than value3. With the SYMMETRIC keyword,

returns TRUE if value1 is not inclusively between value2 and value3. When

either value2 or value3 is NULL, returns TRUE or UNKNOWN. E.g., 12 NOT BETWEEN

15 AND 12 returns TRUE; 12 NOT BETWEEN SYMMETRIC 15 AND 12 returns FALSE; 12

NOT BETWEEN NULL AND 15 returns UNKNOWN; 12 NOT BETWEEN 15 AND NULL returns

TRUE; 12 NO

Re: [PR] [FLINK-36100][table] Support ESCAPE in built-in function LIKE [flink]

lincoln-lil commented on PR #25225: URL: https://github.com/apache/flink/pull/25225#issuecomment-2309221648 Also verified the results with escape characters; all results for supported patterns are consistent with MySQL. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

Re: [PR] [FLINK-26943][table] Add the built-in function DATE_ADD [flink]

dylanhz commented on code in PR #24988: URL: https://github.com/apache/flink/pull/24988#discussion_r1730611766 ## docs/data/sql_functions.yml: ## @@ -653,6 +653,16 @@ temporal: CURRENT_WATERMARK(ts) IS NULL OR ts > CURRENT_WATERMARK(ts) ``` + - sql: DATE_ADD(startDate, numDays) +table: startDate.dateAdd(numDays) +description: | + Returns the date numDays after startDate. + If numDays is negative, -numDays are subtracted from startDate. + + `startDate , numDays ` + + Returns a `DATE`, `NULL` if any of the arguments are `NULL` or result overflows or date string invalid. Review Comment: It seems both of them are acceptable. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[PR] [FLINK-26944][table] Add the built-in function ADD_MONTHS [flink]

dylanhz opened a new pull request, #25252:

URL: https://github.com/apache/flink/pull/25252

## What is the purpose of the change

Add the built-in function ADD_MONTHS.

Examples:

```SQL

> SELECT ADD_MONTHS('2016-08-31', 1);

2016-09-30

> SELECT ADD_MONTHS('2016-08-31', -6);

2016-02-29

```
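As a rough cross-check of the two examples, the same end-of-month clamping can be reproduced with `java.time`. This is only a sketch with a hypothetical class name; it does not claim to be how the PR implements ADD_MONTHS, and it does not cover NULL or invalid inputs.

```java
import java.time.LocalDate;

// Hypothetical sketch, not the PR's implementation.
final class AddMonthsSketch {

    /** Adds (or, for negative values, subtracts) whole months from an ISO-8601 date string. */
    static LocalDate addMonths(String startDate, int numMonths) {
        // plusMonths clamps the day-of-month to the last valid day of the target month.
        return LocalDate.parse(startDate).plusMonths(numMonths);
    }

    public static void main(String[] args) {
        System.out.println(addMonths("2016-08-31", 1));  // 2016-09-30
        System.out.println(addMonths("2016-08-31", -6)); // 2016-02-29 (2016 is a leap year)
    }
}
```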

## Brief change log

[FLINK-26944](https://issues.apache.org/jira/browse/FLINK-26944)

## Verifying this change

`TimeFunctionsITCase#addMonthsTestCases`

## Does this pull request potentially affect one of the following parts:

- Dependencies (does it add or upgrade a dependency): no

- The public API, i.e., is any changed class annotated with

`@Public(Evolving)`: yes

- The serializers: no

- The runtime per-record code paths (performance sensitive): no

- Anything that affects deployment or recovery: JobManager (and its

components), Checkpointing, Kubernetes/Yarn, ZooKeeper: no

- The S3 file system connector: no

## Documentation

- Does this pull request introduce a new feature? yes

- If yes, how is the feature documented? docs


[PR] [FLINK-35579] update frocksdb version to v8.10.0 [flink]

mayuehappy opened a new pull request, #25253: URL: https://github.com/apache/flink/pull/25253 ## What is the purpose of the change *(For example: This pull request makes task deployment go through the blob server, rather than through RPC. That way we avoid re-transferring them on each deployment (during recovery).)* ## Brief change log *(for example:)* - *The TaskInfo is stored in the blob store on job creation time as a persistent artifact* - *Deployments RPC transmits only the blob storage reference* - *TaskManagers retrieve the TaskInfo from the blob cache* ## Verifying this change Please make sure both new and modified tests in this PR follow [the conventions for tests defined in our code quality guide](https://flink.apache.org/how-to-contribute/code-style-and-quality-common/#7-testing). *(Please pick either of the following options)* This change is a trivial rework / code cleanup without any test coverage. *(or)* This change is already covered by existing tests, such as *(please describe tests)*. 
*(or)* This change added tests and can be verified as follows: *(example:)* - *Added integration tests for end-to-end deployment with large payloads (100MB)* - *Extended integration test for recovery after master (JobManager) failure* - *Added test that validates that TaskInfo is transferred only once across recoveries* - *Manually verified the change by running a 4 node cluster with 2 JobManagers and 4 TaskManagers, a stateful streaming program, and killing one JobManager and two TaskManagers during the execution, verifying that recovery happens correctly.* ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): (yes / no) - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (yes / no) - The serializers: (yes / no / don't know) - The runtime per-record code paths (performance sensitive): (yes / no / don't know) - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn, ZooKeeper: (yes / no / don't know) - The S3 file system connector: (yes / no / don't know) ## Documentation - Does this pull request introduce a new feature? (yes / no) - If yes, how is the feature documented? (not applicable / docs / JavaDocs / not documented) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-35579) Update the FrocksDB version in FLINK

[ https://issues.apache.org/jira/browse/FLINK-35579?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-35579: --- Labels: pull-request-available (was: ) > Update the FrocksDB version in FLINK > > > Key: FLINK-35579 > URL: https://issues.apache.org/jira/browse/FLINK-35579 > Project: Flink > Issue Type: Sub-task > Components: Runtime / State Backends >Affects Versions: 2.0.0 >Reporter: Yue Ma >Priority: Major > Labels: pull-request-available > Fix For: 2.0.0 > > -- This message was sent by Atlassian Jira (v8.20.10#820010)

Re: [PR] [FLINK-26944][table] Add the built-in function ADD_MONTHS [flink]

flinkbot commented on PR #25252: URL: https://github.com/apache/flink/pull/25252#issuecomment-2309230928 ## CI report: * 47ce8c16deeb3c373c47d6c99edb8dce50723759 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

Re: [PR] [FLINK-36039][autoscaler] Support clean historical event handler records in JDBC event handler [flink-kubernetes-operator]

1996fanrui merged PR #865: URL: https://github.com/apache/flink-kubernetes-operator/pull/865 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Resolved] (FLINK-36039) Support clean historical event handler records in JDBC event handler

[

https://issues.apache.org/jira/browse/FLINK-36039?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Rui Fan resolved FLINK-36039.

-

Fix Version/s: kubernetes-operator-1.10.0

Resolution: Fixed

Merged to main(1.10.0) via: 6a426b2ff60331b89d67371279f400f8761bf1f3

> Support clean historical event handler records in JDBC event handler

>

>

> Key: FLINK-36039

> URL: https://issues.apache.org/jira/browse/FLINK-36039

> Project: Flink

> Issue Type: Improvement

> Components: Autoscaler

>Reporter: RocMarshal

>Assignee: RocMarshal

>Priority: Minor

> Labels: pull-request-available

> Fix For: kubernetes-operator-1.10.0

>

>

> Currently, the autoscaler generates a large amount of historical data for

> event handlers. As the system runs for a long time, the volume of historical

> data will continue to grow. It is necessary to support automatic cleanup of

> data within a fixed period.

> Based on the creation time timestamp, the following approach for cleaning up

> historical data might be a way:

> * Introduce the parameter {{autoscaler.standalone.jdbc-event-handler.ttl}}

> *

> ** Type: Duration

> ** Default value: 90 days

> ** Setting it to 0 means disabling the cleanup functionality.

> * In the {{JdbcAutoScalerEventHandler}} constructor, introduce a scheduled

> job. Also, add an internal interface method {{close}} for

> {{AutoScalerEventHandler & JobAutoScaler}} to stop and clean up related

> logic.

> * Cleanup logic:

> #

> ## Query the messages with {{create_time}} less than {{(currentTime - ttl)}}

> and find the maximum {{maxId}} in this collection.

> ## Delete 4096 messages at a time from the collection with IDs less than

> {{{}maxId{}}}.

> ## Wait 10 ms between each deletion until the cleanup is complete.

> ## Scan and delete expired data daily

>
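The cleanup steps above can be sketched roughly as follows. To stay self-contained, the sketch replaces the JDBC table with an in-memory `TreeMap` from ID to `create_time`; the class name, method signature, and the inclusive handling of `maxId` are assumptions for illustration, not the operator's actual code. Like the proposal, it assumes that IDs grow with creation time, so every row up to `maxId` is expired.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.Iterator;
import java.util.OptionalLong;
import java.util.TreeMap;

// Hypothetical in-memory sketch of the proposed TTL cleanup, not the operator's implementation.
final class TtlCleanupSketch {
    static final int BATCH_SIZE = 4096;

    /** Deletes expired rows in batches and returns how many rows were removed. */
    static int cleanUp(TreeMap<Long, Instant> table, Instant now, Duration ttl, long pauseMillis) {
        if (ttl.isZero()) {
            return 0; // a TTL of 0 disables the cleanup
        }
        Instant cutoff = now.minus(ttl);
        // Step 1: find the largest ID among rows with create_time < (now - ttl).
        OptionalLong maxId = table.entrySet().stream()
                .filter(e -> e.getValue().isBefore(cutoff))
                .mapToLong(e -> e.getKey())
                .max();
        if (!maxId.isPresent()) {
            return 0;
        }
        int deleted = 0;
        while (true) {
            // Step 2: delete at most BATCH_SIZE rows with ID <= maxId (inclusive by assumption).
            Iterator<Long> ids = table.headMap(maxId.getAsLong(), true).keySet().iterator();
            int batch = 0;
            while (ids.hasNext() && batch < BATCH_SIZE) {
                ids.next();
                ids.remove();
                batch++;
            }
            deleted += batch;
            if (batch < BATCH_SIZE) {
                break; // nothing left to delete
            }
            // Step 3: pause between batches to limit load on the store.
            try {
                Thread.sleep(pauseMillis);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
        }
        return deleted;
    }
}
```

In a real handler this method would presumably be invoked once a day from a scheduled executor, with the two lookups expressed as SQL statements against the event table.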


Re: [PR] [FLINK-35579] update frocksdb version to v8.10.0 [flink]

flinkbot commented on PR #25253: URL: https://github.com/apache/flink/pull/25253#issuecomment-2309236263 ## CI report: * df3cd8e4998d6289622c426e94c52023e15b75a8 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

Re: [PR] [FLINK-36117] Implement AsyncKeyedStateBackend for RocksDBKeyedStateBackend and HeapKeyedStateBackend [flink]

Zakelly commented on code in PR #25233:

URL: https://github.com/apache/flink/pull/25233#discussion_r1730658801

##

flink-runtime/src/main/java/org/apache/flink/runtime/state/v2/ValueStateWrapper.java:

##

@@ -0,0 +1,87 @@

+//

+// Source code recreated from a .class file by IntelliJ IDEA

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.state.v2;

+

+import org.apache.flink.api.common.state.v2.StateFuture;

+import org.apache.flink.api.common.state.v2.ValueState;

+import org.apache.flink.core.state.StateFutureImpl;

+

+import java.io.IOException;

+

+public class ValueStateWrapper implements ValueState {

Review Comment:

How about moving this under package `o.a.f.r.s.v2.adaptor`?

##

flink-runtime/src/main/java/org/apache/flink/runtime/state/heap/HeapKeyedStateBackend.java:

##

@@ -475,6 +482,32 @@ public LocalRecoveryConfig getLocalRecoveryConfig() {

return localRecoveryConfig;

}

+@Override

+public void setup(@Nonnull StateRequestHandler stateRequestHandler) {}

+

+@Nonnull

+@Override

+public S

createState(

+@Nonnull N defaultNamespace,

+@Nonnull TypeSerializer namespaceSerializer,

+@Nonnull org.apache.flink.runtime.state.v2.StateDescriptor

stateDesc)

+throws Exception {

+StateDescriptorTransformer transformer = new

StateDescriptorTransformer();

+StateDescriptor stateDescV1 =

transformer.getStateDescriptor(stateDesc);

+State state = getOrCreateKeyedState(namespaceSerializer, stateDescV1);

+if (stateDescV1.getType() == StateDescriptor.Type.VALUE) {

+return (S) new ValueStateWrapper((ValueState) state);

+}

+throw new UnsupportedOperationException(

+String.format("Unsupported state type: %s",

stateDesc.getType()));

+}

+

+@Nonnull

+@Override

+public StateExecutor createStateExecutor() {

+return null;

+}

+

Review Comment:

I suggest moving this part to a wrapper/adaptor converting a

`KeyedStateBackend` to `AsyncKeyedStateBackend`. The adaptor should exist in

`v2.adaptor` package. And we could wrap `KeyedStateBackend` instance in

`StreamTaskStateInitializerImpl#streamOperatorStateContext`, WDYT?

##

flink-runtime/src/main/java/org/apache/flink/runtime/state/v2/StateDescriptorTransformer.java:

##

@@ -0,0 +1,51 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.state.v2;

+

+public class StateDescriptorTransformer {

+public org.apache.flink.api.common.state.StateDescriptor

getStateDescriptor(

Review Comment:

I'd suggest a util class and static util function for this.

##

flink-runtime/src/main/java/org/apache/flink/runtime/state/v2/ValueStateWrapper.java:

##

@@ -0,0 +1,87 @@

+//

+// Source code recreated from a .class file by IntelliJ IDEA

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable

[PR] [FLINK-35850][table] Add the built-in function DATEDIFF [flink]

dylanhz opened a new pull request, #25254:

URL: https://github.com/apache/flink/pull/25254

## What is the purpose of the change

Add the built-in function DATEDIFF.

Examples:

```SQL

> SELECT DATEDIFF('2009-07-31', '2009-07-30');

1

> SELECT DATEDIFF('2009-07-30', '2009-07-31');

-1

```
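
The two examples above are consistent with "first argument minus second argument, in days". The following is a minimal sketch of that semantics in plain Java using `java.time`; it is not the implementation in this PR, and the class and method names (`DateDiffSketch`, `dateDiff`) are assumptions made for illustration.

```java
import java.time.LocalDate;
import java.time.temporal.ChronoUnit;

/** Illustrative only: day difference between two ISO-8601 date strings. */
final class DateDiffSketch {

    private DateDiffSketch() {}

    /**
     * Returns the number of days from startDate to endDate.
     * The result is negative when endDate is earlier than startDate.
     */
    static int dateDiff(String endDate, String startDate) {
        LocalDate end = LocalDate.parse(endDate);
        LocalDate start = LocalDate.parse(startDate);
        // DAYS.between(a, b) counts days from a (inclusive) to b (exclusive).
        return Math.toIntExact(ChronoUnit.DAYS.between(start, end));
    }
}
```

With this sketch, `DateDiffSketch.dateDiff("2009-07-31", "2009-07-30")` gives 1 and swapping the arguments gives -1, matching the SQL examples.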

## Brief change log

[FLINK-35850](https://issues.apache.org/jira/browse/FLINK-35850)

## Verifying this change

`TimeFunctionsITCase#datediffTestCases`

## Does this pull request potentially affect one of the following parts:

- Dependencies (does it add or upgrade a dependency): no

- The public API, i.e., is any changed class annotated with

`@Public(Evolving)`: yes

- The serializers: no

- The runtime per-record code paths (performance sensitive): no

- Anything that affects deployment or recovery: JobManager (and its

components), Checkpointing, Kubernetes/Yarn, ZooKeeper: no

- The S3 file system connector: no

## Documentation

- Does this pull request introduce a new feature? yes

- If yes, how is the feature documented? docs

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Updated] (FLINK-35850) Add DATEDIFF function

[

https://issues.apache.org/jira/browse/FLINK-35850?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated FLINK-35850:

---

Labels: pull-request-available (was: )

> Add DATEDIFF function

> -

>

> Key: FLINK-35850

> URL: https://issues.apache.org/jira/browse/FLINK-35850

> Project: Flink

> Issue Type: Sub-task

> Components: Table SQL / API

>Reporter: Dylan He

>Assignee: Dylan He

>Priority: Major

> Labels: pull-request-available

>

> Add a DATEDIFF function matching the one in Hive, Spark, and MySQL. Like

> DATE_ADD and DATE_SUB, and unlike TIMESTAMPDIFF, its time interval unit is

> fixed to days.

>

> Returns the number of days from {{startDate}} to {{endDate}}; the time

> parts of the values are ignored.

> Syntax:

> {code:sql}

> DATEDIFF(endDate, startDate)

> {code}

> Arguments:

> * {{endDate}}: A DATE expression.

> * {{startDate}}: A DATE expression.

> Returns:

> An INTEGER.

> See also:

> * [Hive|https://cwiki.apache.org/confluence/display/Hive/Hive+UDFs#HiveUDFs-DateFunctions]

> * [Spark|https://spark.apache.org/docs/3.5.1/sql-ref-functions-builtin.html#string-functions]

> * [Databricks|https://docs.databricks.com/en/sql/language-manual/functions/datediff.html]

> * [MySQL|https://dev.mysql.com/doc/refman/8.4/en/date-and-time-functions.html#function_datediff]
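
The remark in the description that time parts are omitted matters for values that carry a time of day. As a hedged illustration in plain Java (not Flink code; `DateDiffTimePartsSketch` and its method names are hypothetical), truncating both values to their date before counting days gives a different answer than counting whole 24-hour periods:

```java
import java.time.LocalDateTime;
import java.time.temporal.ChronoUnit;

/** Illustrative only: shows the effect of dropping the time part first. */
final class DateDiffTimePartsSketch {

    private DateDiffTimePartsSketch() {}

    /** Day difference after discarding the time of day of both values. */
    static int dateDiff(LocalDateTime end, LocalDateTime start) {
        return Math.toIntExact(
                ChronoUnit.DAYS.between(start.toLocalDate(), end.toLocalDate()));
    }

    /** For contrast: number of complete 24-hour periods between the values. */
    static long wholeDaysBetween(LocalDateTime end, LocalDateTime start) {
        return ChronoUnit.DAYS.between(start, end);
    }
}
```

For a start of 2009-07-30T23:30 and an end of 2009-07-31T00:30, the date-only difference is one day even though only one hour has elapsed, so the whole-day count is zero.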

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

Re: [PR] [FLINK-35850][table] Add the built-in function DATEDIFF [flink]

flinkbot commented on PR #25254:

URL: https://github.com/apache/flink/pull/25254#issuecomment-2309366807

## CI report:

* 833d174116d4cf9cff4caa763214abfffa109ee5 UNKNOWN

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run azure` re-run the last Azure build

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (FLINK-35859) [flink-cdc] Fix: The assigner is not ready to offer finished split information, this should not be called

[

https://issues.apache.org/jira/browse/FLINK-35859?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17876584#comment-17876584

]

Hongshun Wang commented on FLINK-35859:

---

[~pacinogong] the docs of AssignerStatus already show that only

INITIAL_ASSIGNING_FINISHED and

NEWLY_ADDED_ASSIGNING_FINISHED can transition to NEWLY_ADDED_ASSIGNING.

If the status is NEWLY_ADDED_ASSIGNING_SNAPSHOT_FINISHED and it continues, an

exception will be thrown (the real case may sometimes be more complex).
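
The transition rule described in this comment can be sketched as a small state check. This is a simplified stand-in written for illustration, not the flink-cdc `AssignerStatus` class: the enum name `AssignerStatusSketch`, the method names, and the exception type are assumptions, and only the four statuses named in the comment are modelled.

```java
/** Simplified stand-in for illustration; not the real flink-cdc enum. */
enum AssignerStatusSketch {
    INITIAL_ASSIGNING_FINISHED,
    NEWLY_ADDED_ASSIGNING,
    NEWLY_ADDED_ASSIGNING_SNAPSHOT_FINISHED,
    NEWLY_ADDED_ASSIGNING_FINISHED;

    /** Only the two "finished" statuses may move to NEWLY_ADDED_ASSIGNING. */
    boolean canStartAssigningNewTables() {
        return this == INITIAL_ASSIGNING_FINISHED
                || this == NEWLY_ADDED_ASSIGNING_FINISHED;
    }

    /** Returns the next status, or fails if the transition is not allowed. */
    AssignerStatusSketch startAssigningNewTables() {
        if (!canStartAssigningNewTables()) {
            throw new IllegalStateException(
                    "Cannot start assigning newly added tables from status " + this);
        }
        return NEWLY_ADDED_ASSIGNING;
    }
}
```

Under these assumptions, calling `startAssigningNewTables()` on `NEWLY_ADDED_ASSIGNING_SNAPSHOT_FINISHED` fails, which mirrors the situation the comment describes.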

> [flink-cdc] Fix: The assigner is not ready to offer finished split

> information, this should not be called

> -

>

> Key: FLINK-35859

> URL: https://issues.apache.org/jira/browse/FLINK-35859

> Project: Flink

> Issue Type: Bug

> Components: Flink CDC

>Affects Versions: cdc-3.1.1

>Reporter: Hongshun Wang

>Assignee: Hongshun Wang

>Priority: Minor

> Fix For: cdc-3.2.0

>

>

> When using CDC with newly added tables, an error occurs:

> {code:java}

> The assigner is not ready to offer finished split information, this should

> not be called. {code}

> This happens because:

> 1. When the job is stopped and then restarted, the status is

> NEWLY_ADDED_ASSIGNING_SNAPSHOT_FINISHED.

>

> 2. The Enumerator then sends each reader a

> BinlogSplitUpdateRequestEvent to update the binlog (see

> org.apache.flink.cdc.connectors.mysql.source.enumerator.MySqlSourceEnumerator#syncWithReaders).

> 3. The Reader suspends its binlog reader and then sends a

> BinlogSplitMetaRequestEvent to the Enumerator.

> 4. The Enumerator finds that some tables have not been sent, and an error occurs:

> {code:java}

> private void sendBinlogMeta(int subTask, BinlogSplitMetaRequestEvent requestEvent) {

>     // initialize once

>     if (binlogSplitMeta == null) {

>         final List finishedSnapshotSplitInfos = splitAssigner.getFinishedSplitInfos();

>         if (finishedSnapshotSplitInfos.isEmpty()) {

>             LOG.error(

>                     "The assigner offers empty finished split information, this should not happen");

>             throw new FlinkRuntimeException(

>                     "The assigner offers empty finished split information, this should not happen");

>         }

>         binlogSplitMeta =

>                 Lists.partition(

>                         finishedSnapshotSplitInfos, sourceConfig.getSplitMetaGroupSize());

>     }

> }{code}

>
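
The guard and grouping step in the quoted method can be illustrated in isolation. The sketch below is plain Java without Guava or Flink: `SplitMetaGroupingSketch` and `groupFinishedSplitInfos` are hypothetical names, the element type is left generic, and `IllegalStateException` stands in for the exception used in the real code.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative only: fail on an empty list, otherwise split it into fixed-size groups. */
final class SplitMetaGroupingSketch {

    private SplitMetaGroupingSketch() {}

    static <T> List<List<T>> groupFinishedSplitInfos(List<T> infos, int groupSize) {
        if (groupSize <= 0) {
            throw new IllegalArgumentException("groupSize must be positive");
        }
        // Mirrors the guard in the quoted snippet: an empty list is treated as an error.
        if (infos.isEmpty()) {
            throw new IllegalStateException(
                    "The assigner offers empty finished split information, this should not happen");
        }
        List<List<T>> groups = new ArrayList<>();
        for (int from = 0; from < infos.size(); from += groupSize) {
            int to = Math.min(from + groupSize, infos.size());
            // Copy each slice so the groups do not depend on the source list.
            groups.add(new ArrayList<>(infos.subList(from, to)));
        }
        return groups;
    }
}
```

Five entries with a group size of two would yield three groups, the last holding a single entry, while an empty input takes the error path that the issue reports.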


[jira] [Comment Edited] (FLINK-35859) [flink-cdc] Fix: The assigner is not ready to offer finished split information, this should not be called

[

https://issues.apache.org/jira/browse/FLINK-35859?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17876584#comment-17876584

]

Hongshun Wang edited comment on FLINK-35859 at 8/26/24 5:45 AM:

[~pacinogong] The docs of AssignerStatus already show that only

INITIAL_ASSIGNING_FINISHED and

NEWLY_ADDED_ASSIGNING_FINISHED can transition to NEWLY_ADDED_ASSIGNING.

If the status is NEWLY_ADDED_ASSIGNING_SNAPSHOT_FINISHED and it continues to read a new

table, an exception will be thrown (the real case may sometimes be more complex).

was (Author: JIRAUSER298968):

[~pacinogong] . the docs of AssignerStatus has already showed that: only

INITIAL_ASSIGNING_FINISHED and

NEWLY_ADDED_ASSIGNING_FINISHED will be transformed to NEWLY_ADDED_ASSIGNING.

If status is NEWLY_ADDED_ASSIGNING_SNAPSHOT_FINISHED and continue , an

exception will be thrown in this case(sometime maybe more complex)


[jira] [Comment Edited] (FLINK-35859) [flink-cdc] Fix: The assigner is not ready to offer finished split information, this should not be called

[

https://issues.apache.org/jira/browse/FLINK-35859?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17876584#comment-17876584

]

Hongshun Wang edited comment on FLINK-35859 at 8/26/24 5:45 AM:

[~pacinogong] The docs of AssignerStatus already show that only

INITIAL_ASSIGNING_FINISHED and

NEWLY_ADDED_ASSIGNING_FINISHED can transition to NEWLY_ADDED_ASSIGNING.

If the status is NEWLY_ADDED_ASSIGNING_SNAPSHOT_FINISHED and it continues, an

exception will be thrown (the real case may sometimes be more complex).

was (Author: JIRAUSER298968):

[~pacinogong] the docs of AssignerStatus has already showed thay: only

INITIAL_ASSIGNING_FINISHED and

NEWLY_ADDED_ASSIGNING_FINISHED will be transformed to NEWLY_ADDED_ASSIGNING.

If status is NEWLY_ADDED_ASSIGNING_SNAPSHOT_FINISHED and continue , an

exception will be thrown in this case(sometime maybe more complex)

> [flink-cdc] Fix: The assigner is not ready to offer finished split

> information, this should not be called

> -

>

> Key: FLINK-35859

> URL: https://issues.apache.org/jira/browse/FLINK-35859

> Project: Flink

> Issue Type: Bug

> Components: Flink CDC

>Affects Versions: cdc-3.1.1

>Reporter: Hongshun Wang

>Assignee: Hongshun Wang

>Priority: Minor

> Fix For: cdc-3.2.0

>

>

> When using CDC with newly added tables, an error occurs:

> {code:java}

> The assigner is not ready to offer finished split information, this should

> not be called. {code}

> It's because:

> 1. When the job is stopped and then restarted, the status is

> NEWLY_ADDED_ASSIGNING_SNAPSHOT_FINISHED.

>

> 2. The Enumerator then sends each reader a

> BinlogSplitUpdateRequestEvent to update the binlog (see

> org.apache.flink.cdc.connectors.mysql.source.enumerator.MySqlSourceEnumerator#syncWithReaders).

> 3. The Reader will suspend binlog reader then send