[jira] [Commented] (KAFKA-10266) Fix connector configs in docs to mention the correct default value inherited from worker configs

[ https://issues.apache.org/jira/browse/KAFKA-10266?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17167681#comment-17167681 ] Luke Chen commented on KAFKA-10266: --- [PR: https://github.com/apache/kafka/pull/9104|https://github.com/apache/kafka/pull/9104] > Fix connector configs in docs to mention the correct default value inherited > from worker configs > > > Key: KAFKA-10266 > URL: https://issues.apache.org/jira/browse/KAFKA-10266 > Project: Kafka > Issue Type: Bug >Reporter: Konstantine Karantasis >Assignee: Luke Chen >Priority: Major > > > Example: > [https://kafka.apache.org/documentation/#header.converter] > has the correct default when it is mentioned as a worker property. > But under the section of source connector configs, it's default value is said > to be `null`. > Though that is correct in terms of implementation, it's confusing for users. > We should surface the correct defaults for configs that inherit (or otherwise > override) worker configs. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] ijuma commented on pull request #9008: KAFKA-9629 Use generated protocol for Fetch API

ijuma commented on pull request #9008: URL: https://github.com/apache/kafka/pull/9008#issuecomment-665828744 What were the throughput numbers? I assume you meant the connsumer perf test, not console consumer. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] abbccdda merged pull request #9081: KAFKA-10309: KafkaProducer's sendOffsetsToTransaction should not block infinitively

abbccdda merged pull request #9081: URL: https://github.com/apache/kafka/pull/9081 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] mumrah commented on pull request #9008: KAFKA-9629 Use generated protocol for Fetch API

mumrah commented on pull request #9008: URL: https://github.com/apache/kafka/pull/9008#issuecomment-665845747 @ijuma you're right, i meant the consumer perf test. I updated my comment to clarify This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] abbccdda commented on a change in pull request #9001: KAFKA-10028: Implement write path for feature versioning system (KIP-584)

abbccdda commented on a change in pull request #9001:

URL: https://github.com/apache/kafka/pull/9001#discussion_r462453975

##

File path: clients/src/main/java/org/apache/kafka/clients/admin/Admin.java

##

@@ -1214,6 +1215,71 @@ default AlterClientQuotasResult

alterClientQuotas(Collection entries,

AlterClientQuotasOptions options);

+/**

+ * Describes finalized as well as supported features. By default, the

request is issued to any

+ * broker. It can be optionally directed only to the controller via

DescribeFeaturesOptions

+ * parameter. This is particularly useful if the user requires strongly

consistent reads of

+ * finalized features.

+ *

+ * The following exceptions can be anticipated when calling {@code get()}

on the future from the

+ * returned {@link DescribeFeaturesResult}:

+ *

+ * {@link org.apache.kafka.common.errors.TimeoutException}

+ * If the request timed out before the describe operation could

finish.

+ *

+ *

+ * @param options the options to use

+ *

+ * @return the {@link DescribeFeaturesResult} containing the

result

+ */

+DescribeFeaturesResult describeFeatures(DescribeFeaturesOptions options);

Review comment:

Note in the post-KIP-500 world, this feature could still work, but the

request must be redirected to the controller inherently on the broker side,

instead of sending it directly. So in the comment, we may try to phrase it to

convey the principal is that `the request must be handled by the controller`

instead of `the admin client must send this request to the controller`.

##

File path: clients/src/main/java/org/apache/kafka/clients/admin/Admin.java

##

@@ -1214,6 +1215,70 @@ default AlterClientQuotasResult

alterClientQuotas(Collection entries,

AlterClientQuotasOptions options);

+/**

+ * Describes finalized as well as supported features. By default, the

request is issued to any

+ * broker. It can be optionally directed only to the controller via

DescribeFeaturesOptions

+ * parameter. This is particularly useful if the user requires strongly

consistent reads of

+ * finalized features.

+ *

+ * The following exceptions can be anticipated when calling {@code get()}

on the future from the

+ * returned {@link DescribeFeaturesResult}:

+ *

+ * {@link org.apache.kafka.common.errors.TimeoutException}

+ * If the request timed out before the describe operation could

finish.

+ *

+ *

+ * @param options the options to use

+ *

+ * @return the {@link DescribeFeaturesResult} containing the

result

+ */

+DescribeFeaturesResult describeFeatures(DescribeFeaturesOptions options);

+

+/**

+ * Applies specified updates to finalized features. This operation is not

transactional so it

+ * may succeed for some features while fail for others.

+ *

+ * The API takes in a map of finalized feature name to {@link

FeatureUpdate} that need to be

Review comment:

nit: s/name/names

##

File path: core/src/main/scala/kafka/controller/KafkaController.scala

##

@@ -983,8 +1144,25 @@ class KafkaController(val config: KafkaConfig,

*/

private[controller] def sendUpdateMetadataRequest(brokers: Seq[Int],

partitions: Set[TopicPartition]): Unit = {

try {

+ val filteredBrokers = scala.collection.mutable.Set[Int]() ++ brokers

+ if (config.isFeatureVersioningEnabled) {

+def hasIncompatibleFeatures(broker: Broker): Boolean = {

+ val latestFinalizedFeatures = featureCache.get

+ if (latestFinalizedFeatures.isDefined) {

+BrokerFeatures.hasIncompatibleFeatures(broker.features,

latestFinalizedFeatures.get.features)

+ } else {

+false

+ }

+}

+controllerContext.liveOrShuttingDownBrokers.foreach(broker => {

+ if (filteredBrokers.contains(broker.id) &&

hasIncompatibleFeatures(broker)) {

Review comment:

I see, what would happen to a currently live broker if it couldn't get

any metadata update for a while, will it shut down itself?

##

File path:

clients/src/main/java/org/apache/kafka/clients/admin/DescribeFeaturesOptions.java

##

@@ -0,0 +1,48 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either exp

[GitHub] [kafka] abbccdda closed pull request #8940: KAFKA-10181: AlterConfig/IncrementalAlterConfig should route to the controller for non validation calls

abbccdda closed pull request #8940: URL: https://github.com/apache/kafka/pull/8940 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] hachikuji commented on pull request #9008: KAFKA-9629 Use generated protocol for Fetch API

hachikuji commented on pull request #9008: URL: https://github.com/apache/kafka/pull/9008#issuecomment-665938546 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] showuon opened a new pull request #9104: KAFKA-10266: Update the connector config header.converter

showuon opened a new pull request #9104: URL: https://github.com/apache/kafka/pull/9104 After my update, the original wrong statement will be removed. > By default, the SimpleHeaderConverter is used to . And it'll be replaced with the following, with hyperlink to the worker config's header.converter section. > By default, the value is null and the Connect config will be used. Also, update the default value to **Inherited from Connect config**  ### Committer Checklist (excluded from commit message) - [ ] Verify design and implementation - [ ] Verify test coverage and CI build status - [ ] Verify documentation (including upgrade notes) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] jsancio commented on a change in pull request #9050: KAFKA-10193: Add preemption for controller events that have callbacks

jsancio commented on a change in pull request #9050:

URL: https://github.com/apache/kafka/pull/9050#discussion_r462489390

##

File path: core/src/main/scala/kafka/controller/KafkaController.scala

##

@@ -2090,6 +2093,12 @@ case class ReplicaLeaderElection(

callback: ElectLeadersCallback = _ => {}

) extends ControllerEvent {

override def state: ControllerState = ControllerState.ManualLeaderBalance

+

+ override def preempt(): Unit = callback(

+partitionsFromAdminClientOpt.fold(Map.empty[TopicPartition,

Either[ApiError, Int]]) { partitions =>

Review comment:

Yeah. The value returned by the `fold` is passed to `callback`.

`foreach` would return `Unit`.

##

File path: core/src/main/scala/kafka/controller/ControllerEventManager.scala

##

@@ -140,6 +143,9 @@ class ControllerEventManager(controllerId: Int,

}

}

+ // for testing

+ private[controller] def setControllerEventThread(thread:

ControllerEventThread): Unit = this.thread = thread

Review comment:

We can remove this since we have `private[controller] var thread` with

the same visibility.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] apovzner commented on a change in pull request #9072: KAFKA-10162; Make the rate based quota behave more like a Token Bucket (KIP-599, Part III)

apovzner commented on a change in pull request #9072:

URL: https://github.com/apache/kafka/pull/9072#discussion_r462645570

##

File path:

clients/src/main/java/org/apache/kafka/common/metrics/stats/TokenBucket.java

##

@@ -0,0 +1,179 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.common.metrics.stats;

+

+import static java.util.concurrent.TimeUnit.MILLISECONDS;

+

+import java.util.List;

+import java.util.concurrent.TimeUnit;

+import org.apache.kafka.common.metrics.MetricConfig;

+

+/**

+ * The {@link TokenBucket} is a {@link SampledStat} implementing a token

bucket that can be used

+ * in conjunction with a {@link Rate} to enforce a quota.

+ *

+ * A token bucket accumulates tokens with a constant rate R, one token per 1/R

second, up to a

+ * maximum burst size B. The burst size essentially means that we keep

permission to do a unit of

+ * work for up to B / R seconds.

+ *

+ * The {@link TokenBucket} adapts this to fit within a {@link SampledStat}. It

accumulates tokens

+ * in chunks of Q units by default (sample length * quota) instead of one

token every 1/R second

+ * and expires in chunks of Q units too (when the oldest sample is expired).

+ *

+ * Internally, we achieve this behavior by not completing the current sample

until we fill that

+ * sample up to Q (used all credits). Samples are filled up one after the

others until the maximum

+ * number of samples is reached. If it is not possible to created a new

sample, we accumulate in

+ * the last one until a new one can be created. The over used credits are

spilled over to the new

+ * sample at when it is created. Every time a sample is purged, Q credits are

made available.

+ *

+ * It is important to note that the maximum burst is not enforced in the class

and depends on

+ * how the quota is enforced in the {@link Rate}.

+ */

+public class TokenBucket extends SampledStat {

+

+private final TimeUnit unit;

+

+/**

+ * Instantiates a new TokenBucket that works by default with a Quota

{@link MetricConfig#quota()}

+ * in {@link TimeUnit#SECONDS}.

+ */

+public TokenBucket() {

+this(TimeUnit.SECONDS);

+}

+

+/**

+ * Instantiates a new TokenBucket that works with the provided time unit.

+ *

+ * @param unit The time unit of the Quota {@link MetricConfig#quota()}

+ */

+public TokenBucket(TimeUnit unit) {

+super(0);

+this.unit = unit;

+}

+

+@Override

+public void record(MetricConfig config, double value, long timeMs) {

Review comment:

@junrao Regarding "With this change, if we record a large value, the

observed effect of the value could last much longer than the number of samples.

" -- This will not happen with this approach. If we record a very large value,

we never move to the bucket with timestamp > current timestamp (of the

recording time). This approach can only add the value to older buckets, which

did not expire, but never to the buckets "in the future".

For example, if we only have 2 samples, and perSampleQuota = 5, and say we

already filled in both buckets up to quota: [5, 5]. If new requests arrive but

the timestamp did not move past the last bucket, we are going to be adding this

value to the last bucket, for example getting to [5, 20] if we recorded 15. If

the next recording happens after the time moved passed the last bucket, say we

record 3, then buckets will look like [20, 3].

##

File path:

clients/src/main/java/org/apache/kafka/common/metrics/stats/TokenBucket.java

##

@@ -0,0 +1,179 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOU

[GitHub] [kafka] guozhangwang commented on pull request #9095: KAFKA-10321: fix infinite blocking for global stream thread startup

guozhangwang commented on pull request #9095: URL: https://github.com/apache/kafka/pull/9095#issuecomment-665967044 LGTM. Please feel free to merge and cherry-pick. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] showuon commented on pull request #9104: KAFKA-10266: Update the connector config header.converter

showuon commented on pull request #9104: URL: https://github.com/apache/kafka/pull/9104#issuecomment-666170759 @kkonstantine , could you help review this PR to correct the documentation. Thanks. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] abbccdda merged pull request #9095: KAFKA-10321: fix infinite blocking for global stream thread startup

abbccdda merged pull request #9095: URL: https://github.com/apache/kafka/pull/9095 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] mumrah edited a comment on pull request #9008: KAFKA-9629 Use generated protocol for Fetch API

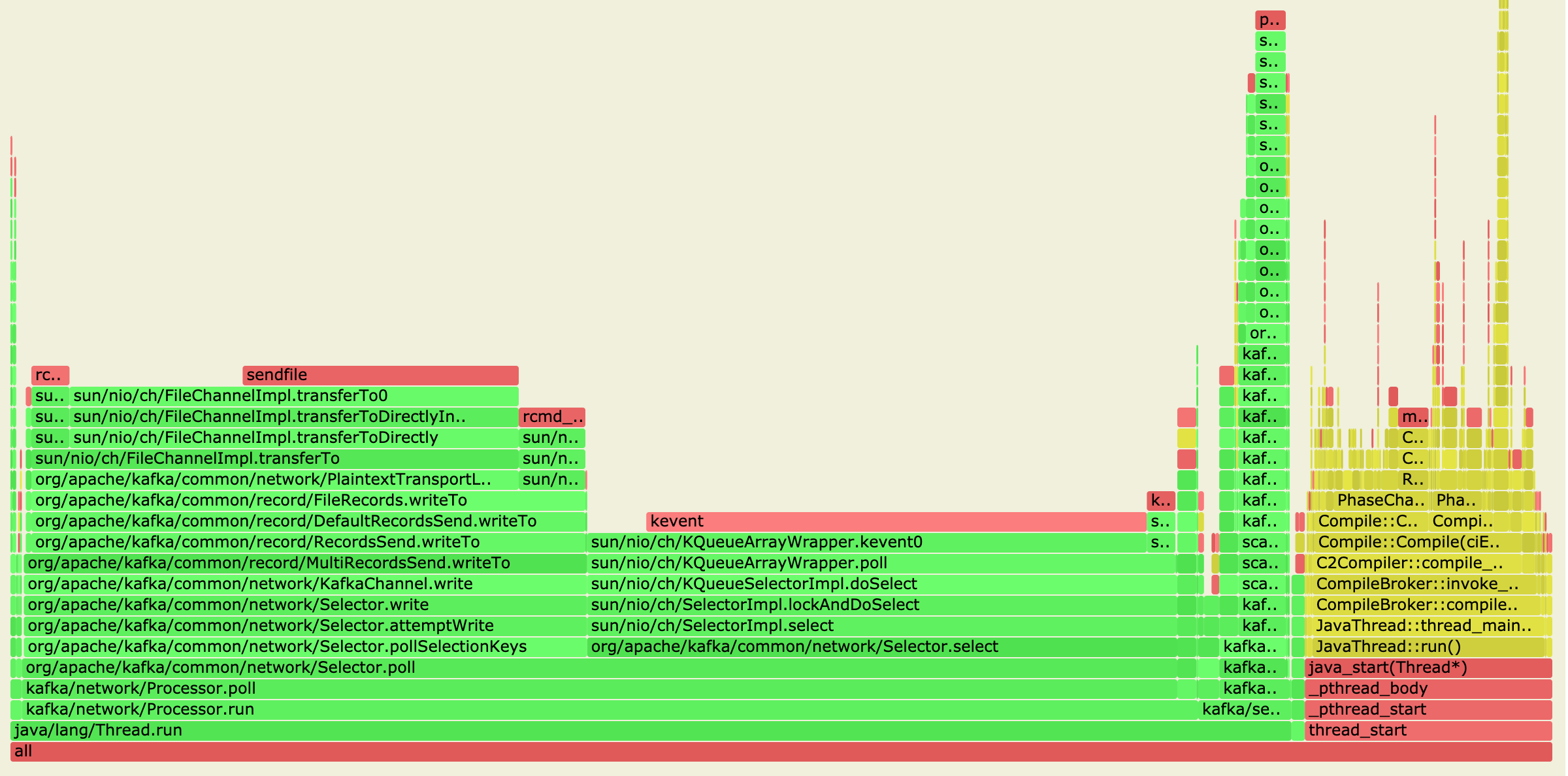

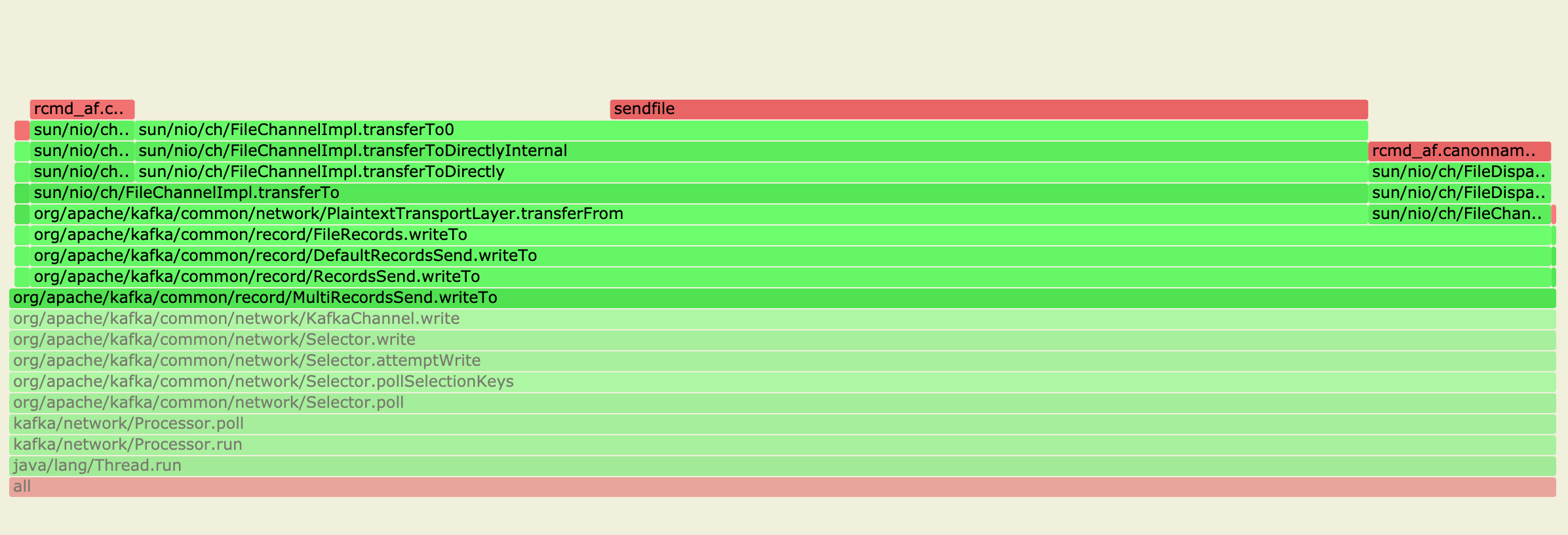

mumrah edited a comment on pull request #9008: URL: https://github.com/apache/kafka/pull/9008#issuecomment-665795947 I ran the consumer perf test (at @hachikuji's suggestion) and took a profile. Throughput was around 500MB/s on trunk and on this branch  Zoomed in a bit on the records part:  This was with only a handful of partitions on a single broker (on my laptop), but it confirms that the new FetchResponse serialization is hitting the same sendfile path as the previous code. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] abbccdda merged pull request #9012: KAFKA-10270: A broker to controller channel manager

abbccdda merged pull request #9012: URL: https://github.com/apache/kafka/pull/9012 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] kkonstantine commented on pull request #8854: KAFKA-10146, KAFKA-9066: Retain metrics for failed tasks (#8502)

kkonstantine commented on pull request #8854: URL: https://github.com/apache/kafka/pull/8854#issuecomment-665952918 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] abbccdda commented on pull request #9095: KAFKA-10321: fix infinite blocking for global stream thread startup

abbccdda commented on pull request #9095: URL: https://github.com/apache/kafka/pull/9095#issuecomment-665999727 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] abbccdda opened a new pull request #9103: Add redirection for (Incremental)AlterConfig

abbccdda opened a new pull request #9103: URL: https://github.com/apache/kafka/pull/9103 *More detailed description of your change, if necessary. The PR title and PR message become the squashed commit message, so use a separate comment to ping reviewers.* *Summary of testing strategy (including rationale) for the feature or bug fix. Unit and/or integration tests are expected for any behaviour change and system tests should be considered for larger changes.* ### Committer Checklist (excluded from commit message) - [ ] Verify design and implementation - [ ] Verify test coverage and CI build status - [ ] Verify documentation (including upgrade notes) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] abbccdda commented on pull request #8940: KAFKA-10181: AlterConfig/IncrementalAlterConfig should route to the controller for non validation calls

abbccdda commented on pull request #8940: URL: https://github.com/apache/kafka/pull/8940#issuecomment-666091752 Will close this PR as the KIP-590 requirement changes This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] mumrah opened a new pull request #9100: Add AlterISR RPC and use it for ISR modifications

mumrah opened a new pull request #9100: URL: https://github.com/apache/kafka/pull/9100 WIP This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] junrao commented on a change in pull request #9072: KAFKA-10162; Make the rate based quota behave more like a Token Bucket (KIP-599, Part III)

junrao commented on a change in pull request #9072:

URL: https://github.com/apache/kafka/pull/9072#discussion_r462624834

##

File path:

clients/src/main/java/org/apache/kafka/common/metrics/stats/TokenBucket.java

##

@@ -0,0 +1,179 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.common.metrics.stats;

+

+import static java.util.concurrent.TimeUnit.MILLISECONDS;

+

+import java.util.List;

+import java.util.concurrent.TimeUnit;

+import org.apache.kafka.common.metrics.MetricConfig;

+

+/**

+ * The {@link TokenBucket} is a {@link SampledStat} implementing a token

bucket that can be used

+ * in conjunction with a {@link Rate} to enforce a quota.

+ *

+ * A token bucket accumulates tokens with a constant rate R, one token per 1/R

second, up to a

+ * maximum burst size B. The burst size essentially means that we keep

permission to do a unit of

+ * work for up to B / R seconds.

+ *

+ * The {@link TokenBucket} adapts this to fit within a {@link SampledStat}. It

accumulates tokens

+ * in chunks of Q units by default (sample length * quota) instead of one

token every 1/R second

+ * and expires in chunks of Q units too (when the oldest sample is expired).

+ *

+ * Internally, we achieve this behavior by not completing the current sample

until we fill that

+ * sample up to Q (used all credits). Samples are filled up one after the

others until the maximum

+ * number of samples is reached. If it is not possible to created a new

sample, we accumulate in

+ * the last one until a new one can be created. The over used credits are

spilled over to the new

+ * sample at when it is created. Every time a sample is purged, Q credits are

made available.

+ *

+ * It is important to note that the maximum burst is not enforced in the class

and depends on

+ * how the quota is enforced in the {@link Rate}.

+ */

+public class TokenBucket extends SampledStat {

+

+private final TimeUnit unit;

+

+/**

+ * Instantiates a new TokenBucket that works by default with a Quota

{@link MetricConfig#quota()}

+ * in {@link TimeUnit#SECONDS}.

+ */

+public TokenBucket() {

+this(TimeUnit.SECONDS);

+}

+

+/**

+ * Instantiates a new TokenBucket that works with the provided time unit.

+ *

+ * @param unit The time unit of the Quota {@link MetricConfig#quota()}

+ */

+public TokenBucket(TimeUnit unit) {

+super(0);

+this.unit = unit;

+}

+

+@Override

+public void record(MetricConfig config, double value, long timeMs) {

Review comment:

Just a high level comment on the approach. This approach tries to spread

a recorded value to multiple samples if the sample level quota is exceeded.

While this matches the token bucket behavior for quota, it changes the behavior

when we observe the value of the measurable. Currently, we record a full value

in the current Sample. When we observe the value of the measurable, the effect

of this value will last for the number of samples. After which, this value

rolls out and no longer impacts the observed measurable. With this change, if

we record a large value, the observed effect of the value could last much

longer than the number of samples. For example, if we have 10 1-sec samples

each with a quota of 1 and we record a value of 1000, the effect of this value

will last for 1000 secs instead of 10 secs from the observability perspective.

This may cause some confusion since we don't quite know when an event actually

occurred.

I was thinking about an alternative approach that decouples the

recording/observation of the measurable from quota calculation. Here is the

rough idea. We create a customized SampledStat that records new values in a

single sample as it is. In addition, it maintains an accumulated available

credit. As time advances, we add new credits based on the quota rate, capped by

samples * perSampleQuota. When a value is recorded, we deduct the value from

the credit and allow the credit to go below 0. We change the

Sensor.checkQuotas() logic such that if the customized SampledStat is used, we

throw QuotaViolationException if credit is < 0. This preserves the current

behavior for observability, but

[GitHub] [kafka] dielhennr opened a new pull request #9101: KAFKA-10325: KIP-649 implementation

dielhennr opened a new pull request #9101: URL: https://github.com/apache/kafka/pull/9101 This is the initial implementation of [KIP-649](https://cwiki.apache.org/confluence/pages/resumedraft.action?draftId=158869615&draftShareId=c349fbe8-7aa8-4fde-a8e4-8d719cda3b9a&;) which implements dynamic client configuration and this is still a work in progress. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] guozhangwang commented on a change in pull request #9095: KAFKA-10321: fix infinite blocking for global stream thread startup

guozhangwang commented on a change in pull request #9095:

URL: https://github.com/apache/kafka/pull/9095#discussion_r462589015

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/GlobalStreamThread.java

##

@@ -173,6 +178,18 @@ public boolean stillRunning() {

}

}

+public boolean inErrorState() {

+synchronized (stateLock) {

+return state.inErrorState();

+}

+}

+

+public boolean stillInitializing() {

+synchronized (stateLock) {

+return !state.isRunning() && !state.inErrorState();

Review comment:

Why not just `state.CREATED` as we are excluding three out of four

states here?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (KAFKA-7540) Flaky Test ConsumerBounceTest#testClose

[

https://issues.apache.org/jira/browse/KAFKA-7540?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17167720#comment-17167720

]

Bruno Cadonna commented on KAFKA-7540:

--

https://builds.apache.org/job/kafka-pr-jdk11-scala2.13/7618

{code:java}

20:08:12 kafka.api.ConsumerBounceTest > testClose FAILED

20:08:12 java.lang.AssertionError: Assignment did not complete on time

20:08:12 at org.junit.Assert.fail(Assert.java:89)

20:08:12 at org.junit.Assert.assertTrue(Assert.java:42)

20:08:12 at

kafka.api.ConsumerBounceTest.checkClosedState(ConsumerBounceTest.scala:486)

20:08:12 at

kafka.api.ConsumerBounceTest.checkCloseWithCoordinatorFailure(ConsumerBounceTest.scala:257)

20:08:12 at

kafka.api.ConsumerBounceTest.testClose(ConsumerBounceTest.scala:220)

{code}

> Flaky Test ConsumerBounceTest#testClose

> ---

>

> Key: KAFKA-7540

> URL: https://issues.apache.org/jira/browse/KAFKA-7540

> Project: Kafka

> Issue Type: Bug

> Components: clients, consumer, unit tests

>Affects Versions: 2.2.0

>Reporter: John Roesler

>Assignee: Jason Gustafson

>Priority: Critical

> Labels: flaky-test

> Fix For: 2.7.0, 2.6.1

>

>

> Observed on Java 8:

> [https://builds.apache.org/job/kafka-pr-jdk8-scala2.11/17314/testReport/junit/kafka.api/ConsumerBounceTest/testClose/]

>

> Stacktrace:

> {noformat}

> java.lang.ArrayIndexOutOfBoundsException: -1

> at

> kafka.integration.KafkaServerTestHarness.killBroker(KafkaServerTestHarness.scala:146)

> at

> kafka.api.ConsumerBounceTest.checkCloseWithCoordinatorFailure(ConsumerBounceTest.scala:238)

> at kafka.api.ConsumerBounceTest.testClose(ConsumerBounceTest.scala:211)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50)

> at

> org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> at

> org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47)

> at

> org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

> at

> org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

> at

> org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

> at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:325)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:78)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:57)

> at org.junit.runners.ParentRunner$3.run(ParentRunner.java:290)

> at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:71)

> at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:288)

> at org.junit.runners.ParentRunner.access$000(ParentRunner.java:58)

> at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:268)

> at

> org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

> at

> org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

> at org.junit.runners.ParentRunner.run(ParentRunner.java:363)

> at

> org.gradle.api.internal.tasks.testing.junit.JUnitTestClassExecutor.runTestClass(JUnitTestClassExecutor.java:106)

> at

> org.gradle.api.internal.tasks.testing.junit.JUnitTestClassExecutor.execute(JUnitTestClassExecutor.java:58)

> at

> org.gradle.api.internal.tasks.testing.junit.JUnitTestClassExecutor.execute(JUnitTestClassExecutor.java:38)

> at

> org.gradle.api.internal.tasks.testing.junit.AbstractJUnitTestClassProcessor.processTestClass(AbstractJUnitTestClassProcessor.java:66)

> at

> org.gradle.api.internal.tasks.testing.SuiteTestClassProcessor.processTestClass(SuiteTestClassProcessor.java:51)

> at sun.reflect.GeneratedMethodAccessor12.invoke(Unknown Source)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at

> org.gradle.internal.dispatch.ReflectionDispatch.dispatch(ReflectionDispatch.java:35)

> at

> org.gradle.internal.dispatch.ReflectionDispatch.dispatch(ReflectionDispatch.java:24)

> at

> org.gradle.internal.dispatch.ContextClassLoaderDispatch.dispatch(ContextClassLoaderDispatch.java:32)

> at

> org.gradle.internal.dispatch.ProxyDispatchAdapter$DispatchingInvocationHandler.invoke(ProxyDispatchAda

[jira] [Commented] (KAFKA-9013) Flaky Test MirrorConnectorsIntegrationTest#testReplication

[

https://issues.apache.org/jira/browse/KAFKA-9013?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17167726#comment-17167726

]

Bruno Cadonna commented on KAFKA-9013:

--

https://builds.apache.org/job/kafka-pr-jdk8-scala2.12/3608

{code:java}

java.lang.RuntimeException: Could not find enough records. found 0, expected 100

at

org.apache.kafka.connect.util.clusters.EmbeddedKafkaCluster.consume(EmbeddedKafkaCluster.java:435)

at

org.apache.kafka.connect.mirror.MirrorConnectorsIntegrationTest.testReplication(MirrorConnectorsIntegrationTest.java:221)

{code}

> Flaky Test MirrorConnectorsIntegrationTest#testReplication

> --

>

> Key: KAFKA-9013

> URL: https://issues.apache.org/jira/browse/KAFKA-9013

> Project: Kafka

> Issue Type: Bug

> Components: KafkaConnect

>Reporter: Bruno Cadonna

>Priority: Major

> Labels: flaky-test

>

> h1. Stacktrace:

> {code:java}

> java.lang.AssertionError: Condition not met within timeout 2. Offsets not

> translated downstream to primary cluster.

> at org.apache.kafka.test.TestUtils.waitForCondition(TestUtils.java:377)

> at org.apache.kafka.test.TestUtils.waitForCondition(TestUtils.java:354)

> at

> org.apache.kafka.connect.mirror.MirrorConnectorsIntegrationTest.testReplication(MirrorConnectorsIntegrationTest.java:239)

> {code}

> h1. Standard Error

> {code}

> Standard Error

> Oct 09, 2019 11:32:00 PM org.glassfish.jersey.internal.inject.Providers

> checkProviderRuntime

> WARNING: A provider

> org.apache.kafka.connect.runtime.rest.resources.ConnectorPluginsResource

> registered in SERVER runtime does not implement any provider interfaces

> applicable in the SERVER runtime. Due to constraint configuration problems

> the provider

> org.apache.kafka.connect.runtime.rest.resources.ConnectorPluginsResource will

> be ignored.

> Oct 09, 2019 11:32:00 PM org.glassfish.jersey.internal.inject.Providers

> checkProviderRuntime

> WARNING: A provider

> org.apache.kafka.connect.runtime.rest.resources.LoggingResource registered in

> SERVER runtime does not implement any provider interfaces applicable in the

> SERVER runtime. Due to constraint configuration problems the provider

> org.apache.kafka.connect.runtime.rest.resources.LoggingResource will be

> ignored.

> Oct 09, 2019 11:32:00 PM org.glassfish.jersey.internal.inject.Providers

> checkProviderRuntime

> WARNING: A provider

> org.apache.kafka.connect.runtime.rest.resources.ConnectorsResource registered

> in SERVER runtime does not implement any provider interfaces applicable in

> the SERVER runtime. Due to constraint configuration problems the provider

> org.apache.kafka.connect.runtime.rest.resources.ConnectorsResource will be

> ignored.

> Oct 09, 2019 11:32:00 PM org.glassfish.jersey.internal.inject.Providers

> checkProviderRuntime

> WARNING: A provider

> org.apache.kafka.connect.runtime.rest.resources.RootResource registered in

> SERVER runtime does not implement any provider interfaces applicable in the

> SERVER runtime. Due to constraint configuration problems the provider

> org.apache.kafka.connect.runtime.rest.resources.RootResource will be ignored.

> Oct 09, 2019 11:32:01 PM org.glassfish.jersey.internal.Errors logErrors

> WARNING: The following warnings have been detected: WARNING: The

> (sub)resource method listLoggers in

> org.apache.kafka.connect.runtime.rest.resources.LoggingResource contains

> empty path annotation.

> WARNING: The (sub)resource method listConnectors in

> org.apache.kafka.connect.runtime.rest.resources.ConnectorsResource contains

> empty path annotation.

> WARNING: The (sub)resource method createConnector in

> org.apache.kafka.connect.runtime.rest.resources.ConnectorsResource contains

> empty path annotation.

> WARNING: The (sub)resource method listConnectorPlugins in

> org.apache.kafka.connect.runtime.rest.resources.ConnectorPluginsResource

> contains empty path annotation.

> WARNING: The (sub)resource method serverInfo in

> org.apache.kafka.connect.runtime.rest.resources.RootResource contains empty

> path annotation.

> Oct 09, 2019 11:32:02 PM org.glassfish.jersey.internal.inject.Providers

> checkProviderRuntime

> WARNING: A provider

> org.apache.kafka.connect.runtime.rest.resources.ConnectorPluginsResource

> registered in SERVER runtime does not implement any provider interfaces

> applicable in the SERVER runtime. Due to constraint configuration problems

> the provider

> org.apache.kafka.connect.runtime.rest.resources.ConnectorPluginsResource will

> be ignored.

> Oct 09, 2019 11:32:02 PM org.glassfish.jersey.internal.inject.Providers

> checkProviderRuntime

> WARNING: A provider

> org.apache.kafka.connect.runtime.rest.resources.ConnectorsResource registered

> in SERVER runtime does not implemen

[jira] [Commented] (KAFKA-10255) Fix flaky testOneWayReplicationWithAutorOffsetSync1 test

[

https://issues.apache.org/jira/browse/KAFKA-10255?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17167727#comment-17167727

]

Bruno Cadonna commented on KAFKA-10255:

---

https://builds.apache.org/job/kafka-pr-jdk8-scala2.12/3608

{code:java}

java.lang.AssertionError: consumer record size is not zero expected:<0> but

was:<4>

{code}

> Fix flaky testOneWayReplicationWithAutorOffsetSync1 test

>

>

> Key: KAFKA-10255

> URL: https://issues.apache.org/jira/browse/KAFKA-10255

> Project: Kafka

> Issue Type: Test

>Reporter: Luke Chen

>Assignee: Luke Chen

>Priority: Major

>

> https://builds.apache.org/blue/rest/organizations/jenkins/pipelines/kafka-trunk-jdk14/runs/279/log/?start=0

> org.apache.kafka.connect.mirror.MirrorConnectorsIntegrationTest >

> testOneWayReplicationWithAutorOffsetSync1 STARTED

> org.apache.kafka.connect.mirror.MirrorConnectorsIntegrationTest.testOneWayReplicationWithAutorOffsetSync1

> failed, log available in

> /home/jenkins/jenkins-slave/workspace/kafka-trunk-jdk14/connect/mirror/build/reports/testOutput/org.apache.kafka.connect.mirror.MirrorConnectorsIntegrationTest.testOneWayReplicationWithAutorOffsetSync1.test.stdout

> org.apache.kafka.connect.mirror.MirrorConnectorsIntegrationTest >

> testOneWayReplicationWithAutorOffsetSync1 FAILED

> java.lang.AssertionError: consumer record size is not zero expected:<0> but

> was:<2>

> at org.junit.Assert.fail(Assert.java:89)

> at org.junit.Assert.failNotEquals(Assert.java:835)

> at org.junit.Assert.assertEquals(Assert.java:647)

> at

> org.apache.kafka.connect.mirror.MirrorConnectorsIntegrationTest.testOneWayReplicationWithAutorOffsetSync1(MirrorConnectorsIntegrationTest.java:349)

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [kafka] cadonna commented on pull request #9087: HOTFIX: Set session timeout and heartbeat interval to default to decrease flakiness

cadonna commented on pull request #9087: URL: https://github.com/apache/kafka/pull/9087#issuecomment-666202514 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] chia7712 commented on pull request #9087: HOTFIX: Set session timeout and heartbeat interval to default to decrease flakiness

chia7712 commented on pull request #9087: URL: https://github.com/apache/kafka/pull/9087#issuecomment-666205937 retest this please This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] dajac commented on a change in pull request #9072: KAFKA-10162; Make the rate based quota behave more like a Token Bucket (KIP-599, Part III)

dajac commented on a change in pull request #9072:

URL: https://github.com/apache/kafka/pull/9072#discussion_r462846252

##

File path:

clients/src/main/java/org/apache/kafka/common/metrics/stats/TokenBucket.java

##

@@ -0,0 +1,179 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.common.metrics.stats;

+

+import static java.util.concurrent.TimeUnit.MILLISECONDS;

+

+import java.util.List;

+import java.util.concurrent.TimeUnit;

+import org.apache.kafka.common.metrics.MetricConfig;

+

+/**

+ * The {@link TokenBucket} is a {@link SampledStat} implementing a token

bucket that can be used

+ * in conjunction with a {@link Rate} to enforce a quota.

+ *

+ * A token bucket accumulates tokens with a constant rate R, one token per 1/R

second, up to a

+ * maximum burst size B. The burst size essentially means that we keep

permission to do a unit of

+ * work for up to B / R seconds.

+ *

+ * The {@link TokenBucket} adapts this to fit within a {@link SampledStat}. It

accumulates tokens

+ * in chunks of Q units by default (sample length * quota) instead of one

token every 1/R second

+ * and expires in chunks of Q units too (when the oldest sample is expired).

+ *

+ * Internally, we achieve this behavior by not completing the current sample

until we fill that

+ * sample up to Q (used all credits). Samples are filled up one after the

others until the maximum

+ * number of samples is reached. If it is not possible to created a new

sample, we accumulate in

+ * the last one until a new one can be created. The over used credits are

spilled over to the new

+ * sample at when it is created. Every time a sample is purged, Q credits are

made available.

+ *

+ * It is important to note that the maximum burst is not enforced in the class

and depends on

+ * how the quota is enforced in the {@link Rate}.

+ */

+public class TokenBucket extends SampledStat {

+

+private final TimeUnit unit;

+

+/**

+ * Instantiates a new TokenBucket that works by default with a Quota

{@link MetricConfig#quota()}

+ * in {@link TimeUnit#SECONDS}.

+ */

+public TokenBucket() {

+this(TimeUnit.SECONDS);

+}

+

+/**

+ * Instantiates a new TokenBucket that works with the provided time unit.

+ *

+ * @param unit The time unit of the Quota {@link MetricConfig#quota()}

+ */

+public TokenBucket(TimeUnit unit) {

+super(0);

+this.unit = unit;

+}

+

+@Override

+public void record(MetricConfig config, double value, long timeMs) {

Review comment:

@junrao Thanks for your comment. Your observation is correct. This is

because we spill over the amount above the quota into any newly created sample.

So @apovzner's example would actually end up like this: [5, 18]. @apovzner I

think that you have mentioned once another way to do this that may not require

to spill over the remainder.

Your remark regarding the observability is right. This is true as soon as we

deviate from a sampled rate, regardless of the implementation that we may

choose eventually. Separating concerns is indeed a good idea if we can.

Regarding your suggested approach, I think that may works. As @apovzner said,

we will need to change the way the throttle time is computed so that it does

not rely on the rate but on the amount of credits. Another concern is that the

credits won't be observable neither so we may keep the correct rate but still

may not understand why one is throttled or not if we can't observe the amount

of credits used/left.

If we want to separate concerns, having both a sampled Rate and a Token

Bucket working side by side would be the best. The Rate would continue to

provide the actual Rate as of today and the Token Bucket would be used to

enforced the quota. We could expose the amount of credits in the bucket via a

new metric. One way to achieve this would be to have two MeasurableStats within

the Sensor: the Rate and the Token Bucket. We would still need to have an

adapted version of the Token Bucket that works with our current configs (quota,

samples, window). I implemented one to compare with the current approach few

weeks ago:

https://github.com/dajac/kafka/blob/1

[GitHub] [kafka] mimaison commented on pull request #9007: KAFKA-10120: Deprecate DescribeLogDirsResult.all() and .values()

mimaison commented on pull request #9007: URL: https://github.com/apache/kafka/pull/9007#issuecomment-666239450 retest this please This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] mimaison commented on pull request #9007: KAFKA-10120: Deprecate DescribeLogDirsResult.all() and .values()

mimaison commented on pull request #9007: URL: https://github.com/apache/kafka/pull/9007#issuecomment-666256788 ok to test This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] cadonna commented on a change in pull request #9094: KAFKA-10054: KIP-613, add TRACE-level e2e latency metrics

cadonna commented on a change in pull request #9094: URL: https://github.com/apache/kafka/pull/9094#discussion_r462881933 ## File path: streams/src/test/java/org/apache/kafka/streams/integration/MetricsIntegrationTest.java ## @@ -668,6 +671,9 @@ private void checkKeyValueStoreMetrics(final String group0100To24, checkMetricByName(listMetricStore, SUPPRESSION_BUFFER_SIZE_CURRENT, 0); checkMetricByName(listMetricStore, SUPPRESSION_BUFFER_SIZE_AVG, 0); checkMetricByName(listMetricStore, SUPPRESSION_BUFFER_SIZE_MAX, 0); +checkMetricByName(listMetricStore, RECORD_E2E_LATENCY_AVG, expectedNumberofE2ELatencyMetrics); +checkMetricByName(listMetricStore, RECORD_E2E_LATENCY_MIN, expectedNumberofE2ELatencyMetrics); +checkMetricByName(listMetricStore, RECORD_E2E_LATENCY_MAX, expectedNumberofE2ELatencyMetrics); Review comment: Sorry, I did a mistake here. We should not give new metrics old groups. I think to fix this test you need to adapt the filter on line 618 to let all metrics with groups that relate to KV state stores pass. See `checkWindowStoreAndSuppressionBufferMetrics()` for an example. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] cadonna commented on a change in pull request #9094: KAFKA-10054: KIP-613, add TRACE-level e2e latency metrics

cadonna commented on a change in pull request #9094:

URL: https://github.com/apache/kafka/pull/9094#discussion_r462882926

##

File path:

streams/src/main/java/org/apache/kafka/streams/state/internals/metrics/StateStoreMetrics.java

##

@@ -443,6 +447,25 @@ public static Sensor suppressionBufferSizeSensor(final

String threadId,

);

}

+public static Sensor e2ELatencySensor(final String threadId,

+ final String taskId,

+ final String storeType,

+ final String storeName,

+ final StreamsMetricsImpl

streamsMetrics) {

+final Sensor sensor = streamsMetrics.storeLevelSensor(threadId,

taskId, storeName, RECORD_E2E_LATENCY, RecordingLevel.TRACE);

+final Map tagMap =

streamsMetrics.storeLevelTagMap(threadId, taskId, storeType, storeName);

+addAvgAndMinAndMaxToSensor(

+sensor,

+STATE_STORE_LEVEL_GROUP,

Review comment:

I just realized that we should not put new metrics into old groups. Your

code is fine. Do not use `stateStoreLevelGroup()`! Sorry for the confusion.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] cadonna commented on a change in pull request #9094: KAFKA-10054: KIP-613, add TRACE-level e2e latency metrics

cadonna commented on a change in pull request #9094:

URL: https://github.com/apache/kafka/pull/9094#discussion_r462883347

##

File path:

streams/src/test/java/org/apache/kafka/streams/state/internals/metrics/StateStoreMetricsTest.java

##

@@ -327,6 +327,38 @@ public void shouldGetExpiredWindowRecordDropSensor() {

assertThat(sensor, is(expectedSensor));

}

+@Test

+public void shouldGetRecordE2ELatencySensor() {

+final String metricName = "record-e2e-latency";

+

+final String e2eLatencyDescription =

+"end-to-end latency of a record, measuring by comparing the record

timestamp with the "

++ "system time when it has been fully processed by the node";

+final String descriptionOfAvg = "The average " + e2eLatencyDescription;

+final String descriptionOfMin = "The minimum " + e2eLatencyDescription;

+final String descriptionOfMax = "The maximum " + e2eLatencyDescription;

+

+expect(streamsMetrics.storeLevelSensor(THREAD_ID, TASK_ID, STORE_NAME,

metricName, RecordingLevel.TRACE))

+.andReturn(expectedSensor);

+expect(streamsMetrics.storeLevelTagMap(THREAD_ID, TASK_ID, STORE_TYPE,

STORE_NAME)).andReturn(storeTagMap);

+StreamsMetricsImpl.addAvgAndMinAndMaxToSensor(

+expectedSensor,

+STORE_LEVEL_GROUP,

Review comment:

I just realized that we should not put new metrics into old groups. Your

code is fine. Do not use instance variable `storeLevelGroup`! Sorry for the

confusion.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] mimaison commented on pull request #8295: KAFKA-9627: Replace ListOffset request/response with automated protocol

mimaison commented on pull request #8295: URL: https://github.com/apache/kafka/pull/8295#issuecomment-666310163 @dajac Ok, I've removed the `Optional` to `Option` changes This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] cadonna commented on pull request #9087: HOTFIX: Set session timeout and heartbeat interval to default to decrease flakiness

cadonna commented on pull request #9087: URL: https://github.com/apache/kafka/pull/9087#issuecomment-666311943 Java 14 failed due to the following failing test: ``` org.apache.kafka.streams.integration.EosBetaUpgradeIntegrationTest > shouldUpgradeFromEosAlphaToEosBeta[true] ``` This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Created] (KAFKA-10327) Make flush after some count of putted records in SinkTask

Pavel Kuznetsov created KAFKA-10327: --- Summary: Make flush after some count of putted records in SinkTask Key: KAFKA-10327 URL: https://issues.apache.org/jira/browse/KAFKA-10327 Project: Kafka Issue Type: Improvement Components: KafkaConnect Affects Versions: 2.5.0 Reporter: Pavel Kuznetsov In current version of kafka connect all records accumulated with SinkTask.put method are flushed to target system on a time-based manner. So data is flushed and offsets are committed every offset.flush.timeout.ms (default is 6) ms. But you can't control the number of messages you receive from Kafka between two flushes. It may cause out of memory errors, because in-memory buffer may grow a lot. I suggest to add out of box support of count-based flush to kafka connect. It requires new configuration parameter (offset.flush.count, for example). Number of records sent to SinkTask.put should be counted, and if these amount is greater than offset.flush.count's value, SinkTask.flush is called and offsets are committed. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] chia7712 commented on pull request #9087: HOTFIX: Set session timeout and heartbeat interval to default to decrease flakiness

chia7712 commented on pull request #9087: URL: https://github.com/apache/kafka/pull/9087#issuecomment-666325276 ```EosBetaUpgradeIntegrationTest``` pass on my local. retest this please This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] chia7712 commented on pull request #9102: KAFKA-10326 Both serializer and deserializer should be able to see th…

chia7712 commented on pull request #9102: URL: https://github.com/apache/kafka/pull/9102#issuecomment-666325552 ```EosBetaUpgradeIntegrationTest``` is flaky... retest this please This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] mimaison commented on pull request #9007: KAFKA-10120: Deprecate DescribeLogDirsResult.all() and .values()

mimaison commented on pull request #9007: URL: https://github.com/apache/kafka/pull/9007#issuecomment-666353178 Failures look unrelated: - org.apache.kafka.streams.integration.EosBetaUpgradeIntegrationTest > shouldUpgradeFromEosAlphaToEosBeta[true] FAILED - org.apache.kafka.connect.mirror.MirrorConnectorsIntegrationTest > testOneWayReplicationWithAutorOffsetSync1 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] mimaison merged pull request #9007: KAFKA-10120: Deprecate DescribeLogDirsResult.all() and .values()

mimaison merged pull request #9007: URL: https://github.com/apache/kafka/pull/9007 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Resolved] (KAFKA-10120) DescribeLogDirsResult exposes internal classes

[

https://issues.apache.org/jira/browse/KAFKA-10120?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Mickael Maison resolved KAFKA-10120.

Fix Version/s: 2.7

Resolution: Fixed

> DescribeLogDirsResult exposes internal classes

> --

>

> Key: KAFKA-10120

> URL: https://issues.apache.org/jira/browse/KAFKA-10120

> Project: Kafka

> Issue Type: Bug

>Reporter: Tom Bentley

>Assignee: Tom Bentley

>Priority: Minor

> Fix For: 2.7

>

>

> DescribeLogDirsResult (returned by AdminClient#describeLogDirs(Collection))

> exposes a number of internal types:

> * {{DescribeLogDirsResponse.LogDirInfo}}

> * {{DescribeLogDirsResponse.ReplicaInfo}}

> * {{Errors}}

> {{}}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [kafka] mimaison commented on a change in pull request #9029: KAFKA-10255: Fix flaky testOneWayReplicationWithAutoOffsetSync test

mimaison commented on a change in pull request #9029:

URL: https://github.com/apache/kafka/pull/9029#discussion_r462989546

##

File path:

connect/mirror/src/test/java/org/apache/kafka/connect/mirror/MirrorConnectorsIntegrationTest.java

##

@@ -345,15 +342,24 @@ private void

waitForConsumerGroupOffsetSync(Consumer consumer, L

}, OFFSET_SYNC_DURATION_MS, "Consumer group offset sync is not

complete in time");

}

+private void waitForConsumingAllRecords(Consumer consumer)

throws InterruptedException {

+final AtomicInteger totalConsumedRecords = new AtomicInteger(0);

+waitForCondition(() -> {

+ConsumerRecords records = consumer.poll(Duration.ofMillis(500));

+consumer.commitSync();

+return NUM_RECORDS_PRODUCED ==

totalConsumedRecords.addAndGet(records.count());

+}, RECORD_CONSUME_DURATION_MS, "Consumer cannot consume all the

records in time");

+}

+

@Test

public void testOneWayReplicationWithAutoOffsetSync() throws

InterruptedException {

// create consumers before starting the connectors so we don't need to

wait for discovery

-Consumer consumer1 =

primary.kafka().createConsumerAndSubscribeTo(Collections.singletonMap(

-"group.id", "consumer-group-1"), "test-topic-1");

-consumer1.poll(Duration.ofMillis(500));

-consumer1.commitSync();

-consumer1.close();

+try (Consumer consumer1 =

primary.kafka().createConsumerAndSubscribeTo(Collections.singletonMap(

+"group.id", "consumer-group-1"), "test-topic-1")) {

+// we need to wait for consuming all the records for MM2

replicaing the expected offsets

Review comment:

`replicaing` -> `replicating`

##

File path:

connect/mirror/src/test/java/org/apache/kafka/connect/mirror/MirrorConnectorsIntegrationTest.java

##

@@ -345,15 +342,24 @@ private void

waitForConsumerGroupOffsetSync(Consumer consumer, L

}, OFFSET_SYNC_DURATION_MS, "Consumer group offset sync is not

complete in time");

}

+private void waitForConsumingAllRecords(Consumer consumer)

throws InterruptedException {

+final AtomicInteger totalConsumedRecords = new AtomicInteger(0);

+waitForCondition(() -> {

+ConsumerRecords records = consumer.poll(Duration.ofMillis(500));

Review comment:

Can we add the types `` to `ConsumerRecords`?

##

File path:

connect/mirror/src/test/java/org/apache/kafka/connect/mirror/MirrorConnectorsIntegrationTest.java

##

@@ -387,11 +393,11 @@ public void testOneWayReplicationWithAutoOffsetSync()

throws InterruptedExceptio

}

// create a consumer at primary cluster to consume the new topic

-consumer1 =

primary.kafka().createConsumerAndSubscribeTo(Collections.singletonMap(

-"group.id", "consumer-group-1"), "test-topic-2");

-consumer1.poll(Duration.ofMillis(500));

-consumer1.commitSync();

-consumer1.close();

+try (Consumer consumer1 =

primary.kafka().createConsumerAndSubscribeTo(Collections.singletonMap(

+"group.id", "consumer-group-1"), "test-topic-2")) {

+// we need to wait for consuming all the records for MM2

replicaing the expected offsets

Review comment:

`replicaing` -> `replicating`

##

File path:

connect/mirror/src/test/java/org/apache/kafka/connect/mirror/MirrorConnectorsIntegrationTest.java

##

@@ -345,15 +342,24 @@ private void

waitForConsumerGroupOffsetSync(Consumer consumer, L

}, OFFSET_SYNC_DURATION_MS, "Consumer group offset sync is not

complete in time");

}

+private void waitForConsumingAllRecords(Consumer consumer)

throws InterruptedException {

+final AtomicInteger totalConsumedRecords = new AtomicInteger(0);

+waitForCondition(() -> {

+ConsumerRecords records = consumer.poll(Duration.ofMillis(500));

+consumer.commitSync();

Review comment:

We can move that line after the `waitForCondition()` block to just

commit once all records have been consumed.

##

File path:

connect/mirror/src/test/java/org/apache/kafka/connect/mirror/MirrorConnectorsIntegrationTest.java

##

@@ -345,15 +342,24 @@ private void

waitForConsumerGroupOffsetSync(Consumer consumer, L

}, OFFSET_SYNC_DURATION_MS, "Consumer group offset sync is not

complete in time");

}

+private void waitForConsumingAllRecords(Consumer consumer)

throws InterruptedException {

+final AtomicInteger totalConsumedRecords = new AtomicInteger(0);

+waitForCondition(() -> {

+ConsumerRecords records = consumer.poll(Duration.ofMillis(500));

+consumer.commitSync();

+return NUM_RECORDS_PRODUCED ==

totalConsumedRecords.addAndGet(records.count());

+}, RECORD_CONSUME_DURATION_MS, "Consumer cannot consume all the

records in time");

Review comment:

nit: The

[jira] [Assigned] (KAFKA-10048) Possible data gap for a consumer after a failover when using MM2

[

https://issues.apache.org/jira/browse/KAFKA-10048?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Mickael Maison reassigned KAFKA-10048:

--

Assignee: Andre Araujo

> Possible data gap for a consumer after a failover when using MM2

>

>

> Key: KAFKA-10048

> URL: https://issues.apache.org/jira/browse/KAFKA-10048

> Project: Kafka

> Issue Type: Bug

> Components: mirrormaker

>Affects Versions: 2.5.0

>Reporter: Andre Araujo

>Assignee: Andre Araujo

>Priority: Major

>

> I've been looking at some MM2 scenarios and identified a situation where

> consumers can miss consuming some data in the even of a failover.

>

> When a consumer subscribes to a topic for the first time and commits offsets,

> the offsets for every existing partition of that topic will be saved to the

> cluster's {{__consumer_offset}} topic. Even if a partition is completely

> empty, the offset {{0}} will still be saved for the consumer's consumer group.

>

> When MM2 is replicating the checkpoints to the remote cluster, though, it

> [ignores anything that has an offset equals to

> zero|https://github.com/apache/kafka/blob/856e36651203b03bf9a6df2f2d85a356644cbce3/connect/mirror/src/main/java/org/apache/kafka/connect/mirror/MirrorCheckpointTask.java#L135],

> replicating offsets only for partitions that contain data.

>

> This can lead to a gap in the data consumed by consumers in the following

> scenario:

> # Topic is created on the source cluster.

> # MM2 is configured to replicate the topic and consumer groups

> # Producer starts to produce data to the source topic but for some reason

> some partitions do not get data initially, while others do (skewed keyed

> messages or bad luck)

> # Consumers start to consume data from that topic and their consumer groups'

> offsets are replicated to the target cluster, *but only for partitions that

> contain data*. The consumers are using the default setting auto.offset.reset

> = latest.

> # A consumer failover to the second cluster is performed (for whatever

> reason), and the offset translation steps are completed. The consumer are not

> restarted yet.

> # The producers continue to produce data to the source cluster topic and now

> produce data to the partitions that were empty before.

> # *After* the producers start producing data, consumers are started on the

> target cluster and start consuming.

> For the partitions that already had data before the failover, everything

> works fine. The consumer offsets will have been translated correctly and the

> consumers will start consuming from the correct position.

> For the partitions that were empty before the failover, though, any data

> written by the producers to those partitions *after the failover but before

> the consumers start* will be completely missed, since the consumers will jump

> straight to the latest offset when they start due to the lack of a zero

> offset stored locally on the target cluster.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Assigned] (KAFKA-10048) Possible data gap for a consumer after a failover when using MM2

[

https://issues.apache.org/jira/browse/KAFKA-10048?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Mickael Maison reassigned KAFKA-10048:

--

Assignee: (was: Mickael Maison)

> Possible data gap for a consumer after a failover when using MM2

>

>

> Key: KAFKA-10048

> URL: https://issues.apache.org/jira/browse/KAFKA-10048

> Project: Kafka

> Issue Type: Bug

> Components: mirrormaker

>Affects Versions: 2.5.0

>Reporter: Andre Araujo

>Priority: Major

>

> I've been looking at some MM2 scenarios and identified a situation where

> consumers can miss consuming some data in the even of a failover.

>

> When a consumer subscribes to a topic for the first time and commits offsets,

> the offsets for every existing partition of that topic will be saved to the

> cluster's {{__consumer_offset}} topic. Even if a partition is completely

> empty, the offset {{0}} will still be saved for the consumer's consumer group.

>

> When MM2 is replicating the checkpoints to the remote cluster, though, it

> [ignores anything that has an offset equals to

> zero|https://github.com/apache/kafka/blob/856e36651203b03bf9a6df2f2d85a356644cbce3/connect/mirror/src/main/java/org/apache/kafka/connect/mirror/MirrorCheckpointTask.java#L135],

> replicating offsets only for partitions that contain data.

>

> This can lead to a gap in the data consumed by consumers in the following

> scenario:

> # Topic is created on the source cluster.

> # MM2 is configured to replicate the topic and consumer groups

> # Producer starts to produce data to the source topic but for some reason

> some partitions do not get data initially, while others do (skewed keyed

> messages or bad luck)

> # Consumers start to consume data from that topic and their consumer groups'

> offsets are replicated to the target cluster, *but only for partitions that

> contain data*. The consumers are using the default setting auto.offset.reset

> = latest.

> # A consumer failover to the second cluster is performed (for whatever

> reason), and the offset translation steps are completed. The consumer are not

> restarted yet.

> # The producers continue to produce data to the source cluster topic and now

> produce data to the partitions that were empty before.

> # *After* the producers start producing data, consumers are started on the

> target cluster and start consuming.

> For the partitions that already had data before the failover, everything

> works fine. The consumer offsets will have been translated correctly and the

> consumers will start consuming from the correct position.

> For the partitions that were empty before the failover, though, any data

> written by the producers to those partitions *after the failover but before

> the consumers start* will be completely missed, since the consumers will jump

> straight to the latest offset when they start due to the lack of a zero

> offset stored locally on the target cluster.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Assigned] (KAFKA-10048) Possible data gap for a consumer after a failover when using MM2

[

https://issues.apache.org/jira/browse/KAFKA-10048?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Mickael Maison reassigned KAFKA-10048:

--

Assignee: Mickael Maison

> Possible data gap for a consumer after a failover when using MM2

>

>

> Key: KAFKA-10048

> URL: https://issues.apache.org/jira/browse/KAFKA-10048

> Project: Kafka

> Issue Type: Bug

> Components: mirrormaker

>Affects Versions: 2.5.0

>Reporter: Andre Araujo

>Assignee: Mickael Maison

>Priority: Major

>

> I've been looking at some MM2 scenarios and identified a situation where

> consumers can miss consuming some data in the even of a failover.

>

> When a consumer subscribes to a topic for the first time and commits offsets,

> the offsets for every existing partition of that topic will be saved to the

> cluster's {{__consumer_offset}} topic. Even if a partition is completely

> empty, the offset {{0}} will still be saved for the consumer's consumer group.

>

> When MM2 is replicating the checkpoints to the remote cluster, though, it

> [ignores anything that has an offset equals to

> zero|https://github.com/apache/kafka/blob/856e36651203b03bf9a6df2f2d85a356644cbce3/connect/mirror/src/main/java/org/apache/kafka/connect/mirror/MirrorCheckpointTask.java#L135],

> replicating offsets only for partitions that contain data.

>

> This can lead to a gap in the data consumed by consumers in the following

> scenario:

> # Topic is created on the source cluster.

> # MM2 is configured to replicate the topic and consumer groups

> # Producer starts to produce data to the source topic but for some reason

> some partitions do not get data initially, while others do (skewed keyed

> messages or bad luck)

> # Consumers start to consume data from that topic and their consumer groups'

> offsets are replicated to the target cluster, *but only for partitions that

> contain data*. The consumers are using the default setting auto.offset.reset

> = latest.

> # A consumer failover to the second cluster is performed (for whatever

> reason), and the offset translation steps are completed. The consumer are not

> restarted yet.

> # The producers continue to produce data to the source cluster topic and now

> produce data to the partitions that were empty before.

> # *After* the producers start producing data, consumers are started on the

> target cluster and start consuming.

> For the partitions that already had data before the failover, everything

> works fine. The consumer offsets will have been translated correctly and the

> consumers will start consuming from the correct position.

> For the partitions that were empty before the failover, though, any data

> written by the producers to those partitions *after the failover but before

> the consumers start* will be completely missed, since the consumers will jump

> straight to the latest offset when they start due to the lack of a zero

> offset stored locally on the target cluster.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [kafka] mumrah commented on pull request #9008: KAFKA-9629 Use generated protocol for Fetch API

mumrah commented on pull request #9008: URL: https://github.com/apache/kafka/pull/9008#issuecomment-666374043 retest this please This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] lct45 commented on a change in pull request #9039: KAFKA-5636: SlidingWindows (KIP-450)

lct45 commented on a change in pull request #9039:

URL: https://github.com/apache/kafka/pull/9039#discussion_r463026843

##

File path:

streams/src/main/java/org/apache/kafka/streams/kstream/internals/CogroupedStreamAggregateBuilder.java

##

@@ -132,16 +135,19 @@

final boolean stateCreated,

final StoreBuilder storeBuilder,

final Windows windows,

+

final SlidingWindows slidingWindows,

final SessionWindows sessionWindows,

final Merger sessionMerger) {

final ProcessorSupplier kStreamAggregate;

-if (windows == null && sessionWindows == null) {

+if (windows == null && slidingWindows == null && sessionWindows ==

null) {

kStreamAggregate = new KStreamAggregate<>(storeBuilder.name(),

initializer, aggregator);

-} else if (windows != null && sessionWindows == null) {

+} else if (windows != null && slidingWindows == null && sessionWindows

== null) {

kStreamAggregate = new KStreamWindowAggregate<>(windows,

storeBuilder.name(), initializer, aggregator);

-} else if (windows == null && sessionMerger != null) {

+} else if (windows == null && slidingWindows != null && sessionWindows

== null) {

+kStreamAggregate = new