[

https://issues.apache.org/jira/browse/HIVE-26046?focusedWorklogId=804617&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-804617

]

ASF GitHub Bot logged work on HIVE-26046:

-----------------------------------------

Author: ASF GitHub Bot

Created on: 30/Aug/22 03:12

Start Date: 30/Aug/22 03:12

Worklog Time Spent: 10m

Work Description: zhangbutao commented on code in PR #3276:

URL: https://github.com/apache/hive/pull/3276#discussion_r957963875

##########

standalone-metastore/metastore-server/src/main/java/org/apache/hadoop/hive/metastore/dataconnector/jdbc/MySQLConnectorProvider.java:

##########

@@ -90,10 +90,20 @@ protected String getDataType(String dbDataType, int size) {

// map any db specific types here.

switch (dbDataType.toLowerCase())

{

+ case "bit":

+ return toHiveBitType(size);

default:

mappedType = ColumnType.VOID_TYPE_NAME;

break;

}

return mappedType;

}

+

+ private String toHiveBitType(int size) {

+ if (size <= 1) {

+ return ColumnType.BOOLEAN_TYPE_NAME;

+ } else {

+ return ColumnType.BIGINT_TYPE_NAME;

Review Comment:

@nrg4878 Another alternative is the one I mentioned earlier: use Hive's

built-in bin() UDF to render the bigint values in binary form. This approach

requires users to be aware of Hive's bin() UDF.

Steps to test:

1. Create a JDBC-mapped table in Hive, using Hive's bigint to map MySQL's bit

datatype:

`CREATE EXTERNAL TABLE jdbc_testmysqlbit_use_bigint`

`(`

` id bigint`

`)`

`STORED BY 'org.apache.hive.storage.jdbc.JdbcStorageHandler'`

`TBLPROPERTIES (`

`"hive.sql.database.type" = "MYSQL",`

`"hive.sql.jdbc.driver" = "com.mysql.jdbc.Driver",`

`"hive.sql.jdbc.url" = "jdbc:mysql://localhost:3306/testdb",`

`"hive.sql.dbcp.username" = "user",`

`"hive.sql.dbcp.password" = "passwd",`

`"hive.sql.table" = "testmysqlbit",`

`"hive.sql.dbcp.maxActive" = "1"`

`);`

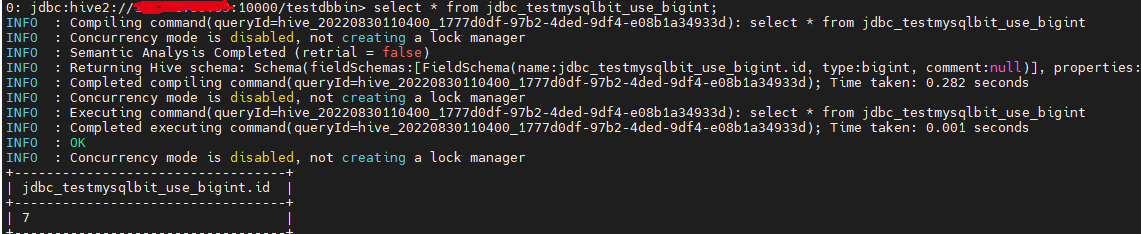

2. Run `select * from jdbc_testmysqlbit_use_bigint;` in Hive beeline to see the stored bigint values.

3. Run `select bin(id) from jdbc_testmysqlbit_use_bigint;` in Hive beeline to see the same values in binary form.
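
To illustrate what step 3 produces, here is a minimal Java sketch of the bigint-to-binary-string conversion. It assumes `Long.toBinaryString` is a reasonable stand-in for Hive's bin() UDF on bigint input. The class name `BinSketch`, the helper `binPadded`, and the sample value 5 are hypothetical and are not part of the PR or of Hive.

```java
public class BinSketch {

    // Stand-in for Hive's bin() on a bigint: the value's binary digits as a string.
    // Assumption: Long.toBinaryString matches bin() for the values of interest.
    static String bin(long value) {
        return Long.toBinaryString(value);
    }

    // Hypothetical helper (not in Hive): left-pad with zeros to the declared
    // MySQL bit(width), so leading zero bits remain visible.
    static String binPadded(long value, int width) {
        String bits = Long.toBinaryString(value);
        StringBuilder sb = new StringBuilder();
        for (int i = bits.length(); i < width; i++) {
            sb.append('0');
        }
        return sb.append(bits).toString();
    }

    public static void main(String[] args) {
        long id = 5L; // made-up sample value for a MySQL bit(8) column read as bigint
        System.out.println(bin(id));          // prints 101
        System.out.println(binPadded(id, 8)); // prints 00000101
    }
}
```

Note that the unpadded form drops leading zero bits, so a user who needs the full declared width of the MySQL column would have to pad the result themselves.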

Issue Time Tracking

-------------------

Worklog Id: (was: 804617)

Time Spent: 4h 10m (was: 4h)

> MySQL's bit datatype is default to void datatype in hive

> --------------------------------------------------------

>

> Key: HIVE-26046

> URL: https://issues.apache.org/jira/browse/HIVE-26046

> Project: Hive

> Issue Type: Sub-task

> Components: Standalone Metastore

> Affects Versions: 4.0.0

> Reporter: Naveen Gangam

> Assignee: zhangbutao

> Priority: Major

> Labels: pull-request-available

> Time Spent: 4h 10m

> Remaining Estimate: 0h

>

> Running describe on a table that contains a "bit" column maps that column to void. We

> need explicit conversion logic in the MySQL ConnectorProvider to map it to

> a suitable datatype in hive.

> {noformat}

> +--------------------+--------------+-------------------+
> | col_name           | data_type    | comment           |
> +--------------------+--------------+-------------------+
> | tbl_id             | bigint       | from deserializer |
> | create_time        | int          | from deserializer |
> | db_id              | bigint       | from deserializer |
> | last_access_time   | int          | from deserializer |
> | owner              | varchar(767) | from deserializer |
> | owner_type         | varchar(10)  | from deserializer |
> | retention          | int          | from deserializer |
> | sd_id              | bigint       | from deserializer |
> | tbl_name           | varchar(256) | from deserializer |
> | tbl_type           | varchar(128) | from deserializer |
> | view_expanded_text | string       | from deserializer |
> | view_original_text | string       | from deserializer |
> | is_rewrite_enabled | void         | from deserializer |
> | write_id           | bigint       | from deserializer |

> {noformat}

--

This message was sent by Atlassian Jira

(v8.20.10#820010)