[ https://issues.apache.org/jira/browse/HIVE-21218?focusedWorklogId=399394&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-399394 ]

ASF GitHub Bot logged work on HIVE-21218:

-----------------------------------------

Author: ASF GitHub Bot

Created on: 06/Mar/20 22:17

Start Date: 06/Mar/20 22:17

Worklog Time Spent: 10m

Work Description: davidov541 commented on issue #933: HIVE-21218: Adding support for Confluent Kafka Avro message format

URL: https://github.com/apache/hive/pull/933#issuecomment-595988456

OK, I was able to test this build successfully using a Confluent single-node cluster and a Hive pseudo-standalone cluster. I created a topic with a simple Avro schema and a few records, and then read them from Hive successfully.

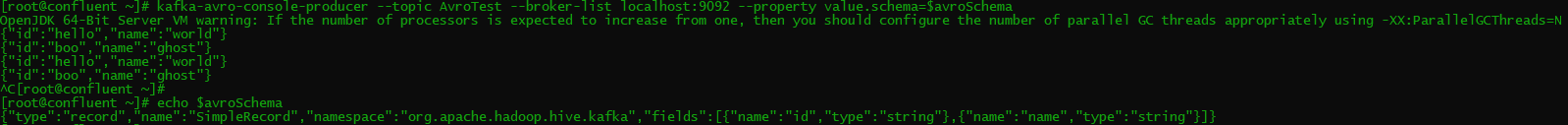

Confluent Cluster Production:

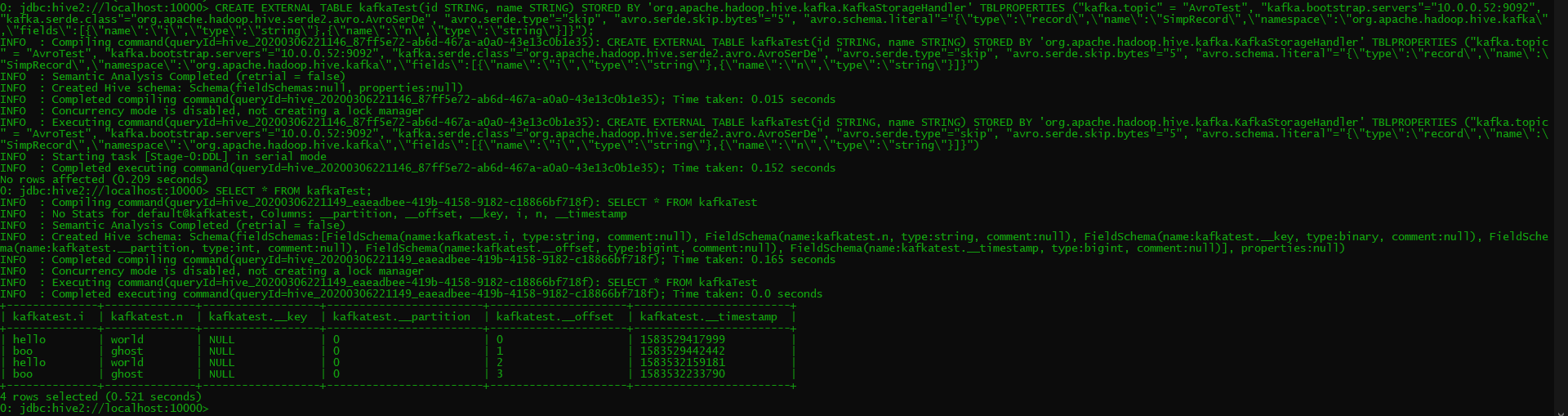

Hive Table Creation and Querying:

One thing I noticed was that, on the Hive side, if I used exactly the same schema as the SimpleRecord schema that we use for testing, I got the following error:

```
2020-03-06T22:05:23,739 WARN [HiveServer2-Handler-Pool: Thread-165] thrift.ThriftCLIService: Error fetching results:
org.apache.hive.service.cli.HiveSQLException: java.io.IOException: java.lang.ClassCastException: org.apache.avro.util.Utf8 cannot be cast to java.lang.String
    at org.apache.hive.service.cli.operation.SQLOperation.getNextRowSet(SQLOperation.java:481) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.cli.operation.OperationManager.getOperationNextRowSet(OperationManager.java:331) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.cli.session.HiveSessionImpl.fetchResults(HiveSessionImpl.java:946) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.cli.CLIService.fetchResults(CLIService.java:567) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.cli.thrift.ThriftCLIService.FetchResults(ThriftCLIService.java:801) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.rpc.thrift.TCLIService$Processor$FetchResults.getResult(TCLIService.java:1837) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.rpc.thrift.TCLIService$Processor$FetchResults.getResult(TCLIService.java:1822) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.auth.TSetIpAddressProcessor.process(TSetIpAddressProcessor.java:56) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_242]
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_242]
    at java.lang.Thread.run(Thread.java:748) [?:1.8.0_242]
Caused by: java.io.IOException: java.lang.ClassCastException: org.apache.avro.util.Utf8 cannot be cast to java.lang.String
    at org.apache.hadoop.hive.ql.exec.FetchOperator.getNextRow(FetchOperator.java:638) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.exec.FetchOperator.pushRow(FetchOperator.java:545) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.exec.FetchTask.fetch(FetchTask.java:150) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.Driver.getResults(Driver.java:880) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.reexec.ReExecDriver.getResults(ReExecDriver.java:241) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.cli.operation.SQLOperation.getNextRowSet(SQLOperation.java:476) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    ... 13 more
Caused by: java.lang.ClassCastException: org.apache.avro.util.Utf8 cannot be cast to java.lang.String
    at org.apache.hadoop.hive.kafka.SimpleRecord.put(SimpleRecord.java:88) ~[kafka-handler-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.avro.generic.GenericData.setField(GenericData.java:690) ~[avro-1.8.2.jar:1.8.2]
    at org.apache.avro.specific.SpecificDatumReader.readField(SpecificDatumReader.java:119) ~[avro-1.8.2.jar:1.8.2]
    at org.apache.avro.generic.GenericDatumReader.readRecord(GenericDatumReader.java:222) ~[avro-1.8.2.jar:1.8.2]
    at org.apache.avro.generic.GenericDatumReader.readWithoutConversion(GenericDatumReader.java:175) ~[avro-1.8.2.jar:1.8.2]
    at org.apache.avro.generic.GenericDatumReader.read(GenericDatumReader.java:153) ~[avro-1.8.2.jar:1.8.2]
    at org.apache.avro.generic.GenericDatumReader.read(GenericDatumReader.java:145) ~[avro-1.8.2.jar:1.8.2]
    at org.apache.hadoop.hive.kafka.KafkaSerDe$AvroBytesConverter.getWritable(KafkaSerDe.java:401) ~[kafka-handler-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.kafka.KafkaSerDe$AvroBytesConverter.getWritable(KafkaSerDe.java:367) ~[kafka-handler-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.kafka.KafkaSerDe.deserializeKWritable(KafkaSerDe.java:250) ~[kafka-handler-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.kafka.KafkaSerDe.deserialize(KafkaSerDe.java:238) ~[kafka-handler-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.exec.FetchOperator.getNextRow(FetchOperator.java:619) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.exec.FetchOperator.pushRow(FetchOperator.java:545) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.exec.FetchTask.fetch(FetchTask.java:150) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.Driver.getResults(Driver.java:880) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.reexec.ReExecDriver.getResults(ReExecDriver.java:241) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.cli.operation.SQLOperation.getNextRowSet(SQLOperation.java:476) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    ... 13 more
```

It concerns me that an Avro schema we use for testing is included in the final build, and also that using it in the Hive table produces this error. I think this is likely a separate issue, but I wanted to run it by you (@b-slim) first before filing a separate JIRA and setting it aside for now.
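For context on the error above: by default, Avro's Java readers decode `string` fields as `org.apache.avro.util.Utf8`, which implements `CharSequence` but is not a `java.lang.String`. Because the table schema has the same full name as the test class, `SpecificDatumReader` appears to resolve it to `org.apache.hadoop.hive.kafka.SimpleRecord` on the classpath, and the trace shows `SimpleRecord.put` casting the value directly to `String`. The following is a hypothetical sketch, not Hive's code (the class `RecordSketch` and its two fields are assumptions), of a defensive `put` that converts any `CharSequence` via `toString()`. A `StringBuilder` stands in for `Utf8` so the example runs without Avro on the classpath.

```java
// Hypothetical sketch: RecordSketch is NOT Hive's SimpleRecord; it only
// illustrates converting Avro string values defensively in put().
public class RecordSketch {
    private String id;
    private String name;

    // GenericData.setField calls put(index, value) for each decoded field.
    // By default a string field arrives as org.apache.avro.util.Utf8
    // (a CharSequence), so a direct (String) cast would throw.
    public void put(int field, Object value) {
        switch (field) {
            case 0:
                id = asString(value);
                break;
            case 1:
                name = asString(value);
                break;
            default:
                throw new IndexOutOfBoundsException("Invalid field index: " + field);
        }
    }

    // Accepts String, Utf8, or any other CharSequence; null stays null.
    static String asString(Object value) {
        return value == null ? null : ((CharSequence) value).toString();
    }

    public String getId() {
        return id;
    }

    public String getName() {
        return name;
    }

    public static void main(String[] args) {
        RecordSketch record = new RecordSketch();
        // StringBuilder stands in for Utf8: a CharSequence that is not a String.
        record.put(0, new StringBuilder("id-1"));
        record.put(1, "alice");
        System.out.println(record.getId() + " " + record.getName()); // prints "id-1 alice"
    }
}
```

Alternatively, setting `"avro.java.string": "String"` on the string type in the schema (for example `{"name": "id", "type": {"type": "string", "avro.java.string": "String"}}`) asks Avro's Java readers to return `String` directly; whether that is appropriate for the Hive table schema here is an assumption.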


Issue Time Tracking

-------------------

Worklog Id: (was: 399394)

Time Spent: 13h 10m (was: 13h)

> KafkaSerDe doesn't support topics created via Confluent Avro serializer
> -----------------------------------------------------------------------
>
> Key: HIVE-21218
> URL: https://issues.apache.org/jira/browse/HIVE-21218
> Project: Hive
> Issue Type: Bug
> Components: kafka integration, Serializers/Deserializers
> Affects Versions: 3.1.1
> Reporter: Milan Baran
> Assignee: David McGinnis
> Priority: Major
> Labels: pull-request-available
> Attachments: HIVE-21218.2.patch, HIVE-21218.3.patch, HIVE-21218.4.patch, HIVE-21218.5.patch, HIVE-21218.6.patch, HIVE-21218.7.patch, HIVE-21218.patch
>
> Time Spent: 13h 10m
> Remaining Estimate: 0h

>

> According to [Google groups|https://groups.google.com/forum/#!topic/confluent-platform/JYhlXN0u9_A], the Confluent Avro serializer uses a proprietary format for the Kafka value:
> <magic_byte 0x00><4 bytes of schema ID><regular Avro bytes for an object that conforms to the schema>.
> This format does not cause any problem for the Confluent Kafka deserializer, which respects the format. For the Hive Kafka handler, however, it is a problem to deserialize the Kafka value correctly, because Hive uses a custom deserializer from bytes to objects and ignores the Kafka consumer serializer/deserializer classes provided via table property.
> It would be nice to support the Confluent format with the magic byte.
> It would also be great to support the Schema Registry.
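The framing described in the issue can be illustrated with a minimal Java sketch that strips the 5-byte Confluent header before the remaining bytes are handed to an Avro decoder. It assumes the schema ID is a big-endian 32-bit integer, as in Confluent's documented wire format. `ConfluentFrame` is a hypothetical helper, not part of Hive, Kafka, or Confluent's client libraries, and looking up the writer schema by ID (for example, from a Schema Registry) is deliberately left out.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

// Hypothetical helper: splits a Confluent-framed Kafka value into
// <magic byte 0x00><4-byte big-endian schema ID><Avro binary payload>.
public final class ConfluentFrame {
    static final byte MAGIC_BYTE = 0x00;
    static final int HEADER_LENGTH = 1 + Integer.BYTES; // magic byte + schema ID

    public final int schemaId;
    public final byte[] avroPayload;

    private ConfluentFrame(int schemaId, byte[] avroPayload) {
        this.schemaId = schemaId;
        this.avroPayload = avroPayload;
    }

    public static ConfluentFrame parse(byte[] value) {
        if (value == null || value.length < HEADER_LENGTH) {
            throw new IllegalArgumentException("Value too short for Confluent framing");
        }
        ByteBuffer buffer = ByteBuffer.wrap(value); // ByteBuffer is big-endian by default
        byte magic = buffer.get();
        if (magic != MAGIC_BYTE) {
            throw new IllegalArgumentException("Unexpected magic byte: " + magic);
        }
        int schemaId = buffer.getInt();
        // Everything after the header is plain Avro binary for the writer schema.
        byte[] payload = Arrays.copyOfRange(value, HEADER_LENGTH, value.length);
        return new ConfluentFrame(schemaId, payload);
    }

    public static void main(String[] args) {
        byte[] value = {0x00, 0x00, 0x00, 0x01, 0x2A, 0x02, 0x61};
        ConfluentFrame frame = parse(value);
        System.out.println(frame.schemaId + " " + frame.avroPayload.length); // prints "298 2"
    }
}
```

For the sample value, the schema ID bytes `0x00 0x00 0x01 0x2A` give 298, and the payload `0x02 0x61` is the Avro binary encoding of the string `"a"` (zig-zag length 1, then the UTF-8 byte).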
