Igor-Misic opened a new pull request, #8496: URL: https://github.com/apache/nuttx/pull/8496

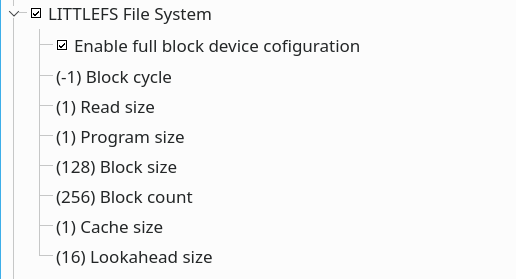

## Summary

This adds full control over the LittleFS granularity configuration. The default values are taken from the LittleFS [README.md](https://github.com/littlefs-project/littlefs/blob/master/README.md?plain=1#L49-L56), except `block_cycles`, which I left at 200 (the README uses 500).

To demonstrate the usefulness of this additional configuration, I'll give two examples. The first uses a 256 Kb (32 KiB) FRAM. The second uses a 1 Gb (128 MiB) Winbond W25N01GV. (**I can't find a driver for it in NuttX** yet, but it serves as an example because I have experience using it with LittleFS.)

LittleFS is a block-based filesystem with two levels of granularity:

- The logical block, defined by the block size.
- The read/program granularity, defined by the read and program sizes.

Reference: https://github.com/littlefs-project/littlefs/blob/master/SPEC.md?plain=1#L20-L29

The smallest recommended block size for LittleFS is 128 bytes (see: https://github.com/littlefs-project/littlefs/issues/264#issuecomment-519963153).

#### (32 KiB) FRAM

FRAM has 1-byte granularity and can work as a single big page/block. To fit LittleFS's design as a block-based filesystem, we need to divide the FRAM into small blocks. For a 32 KiB FRAM, that is 256 blocks of 128 bytes each. Knowing this, we can enable 1-byte granularity for the FRAM:
```
CONFIG_RAMTRON_EMULATE_PAGE_SHIFT=0
CONFIG_RAMTRON_EMULATE_SECTOR_SHIFT=0
```

Then set the following configuration for LittleFS:

```
# Enable full control
CONFIG_FS_LITTLEFS_ADVANCED_BLOCK_DEVICE_CFG=y
# 32768 / 128 = 256 blocks of 128 bytes each
CONFIG_FS_LITTLEFS_BLOCK_COUNT=256
# -1 disables block-level wear leveling; FRAM has high endurance (1 trillion, 10^12, read/writes)
CONFIG_FS_LITTLEFS_BLOCK_CYCLE=-1
# Minimum recommended block size
CONFIG_FS_LITTLEFS_BLOCK_SIZE=128
# NoDelay™ writes
CONFIG_FS_LITTLEFS_CACHE_SIZE=1
# Enough to track 128 of the 256 blocks
CONFIG_FS_LITTLEFS_LOOKAHEAD_SIZE=16
# 1-byte program granularity with direct writes to SPI
CONFIG_FS_LITTLEFS_PROGRAM_SIZE=1
# 1-byte read granularity with direct reads from SPI
CONFIG_FS_LITTLEFS_READ_SIZE=1
```

This was not achievable before this PR. When the FRAM is set to 1-byte granularity, the MTD block device reports a block size of 32768 bytes and a block count of 1. That is not enough blocks for LittleFS to work.

#### W25N01GV

This specific **NAND** flash memory has a limitation that must be addressed before it can be used with LittleFS. The page size of the W25N01GV is 2048 bytes, but each page can be programmed only 4 times before it must be erased. Reference: https://igor-misic.blogspot.com/2020/12/winbond-nand-flash-w25n01gv-problem.html

Knowing this limitation, we can set up the LittleFS configuration manually:

```
# Enable full control
CONFIG_FS_LITTLEFS_ADVANCED_BLOCK_DEVICE_CFG=y
# Blocks per die
CONFIG_FS_LITTLEFS_BLOCK_COUNT=1024
CONFIG_FS_LITTLEFS_BLOCK_CYCLE=500
# 64 * 2048 (pages per block * page size)
CONFIG_FS_LITTLEFS_BLOCK_SIZE=131072
CONFIG_FS_LITTLEFS_CACHE_SIZE=512
# Enough to track 4096 blocks
CONFIG_FS_LITTLEFS_LOOKAHEAD_SIZE=512
# 2048 / 4 = 512 (1 page is 2048 bytes); limited by the 4 programs allowed per W25N01GV page
CONFIG_FS_LITTLEFS_PROGRAM_SIZE=512
CONFIG_FS_LITTLEFS_READ_SIZE=512
```

This way, each 2048-byte page can be programmed in four 512-byte chunks. That is a program granularity 4 times finer than what was possible before this change.
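The arithmetic behind both configurations can be checked mechanically. Below is a minimal C sketch, not part of this PR. `struct lfs_geometry` and its helper functions are hypothetical names. The field names mirror littlefs's `struct lfs_config`, but this is not the struct from `lfs.h`. `geometry_ok()` encodes the divisibility rules documented in littlefs's `lfs.h`:

- The block size must be a multiple of the read and program sizes.
- The cache size must be a multiple of the read and program sizes.
- The cache size must be a factor of the block size.

It also checks the 128-byte minimum block size discussed above.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical mirror of the CONFIG_FS_LITTLEFS_* values from this PR.
 * Field names follow littlefs's struct lfs_config, but this is NOT the
 * struct defined in lfs.h.
 */

struct lfs_geometry
{
  uint32_t read_size;      /* CONFIG_FS_LITTLEFS_READ_SIZE */
  uint32_t prog_size;      /* CONFIG_FS_LITTLEFS_PROGRAM_SIZE */
  uint32_t block_size;     /* CONFIG_FS_LITTLEFS_BLOCK_SIZE */
  uint32_t block_count;    /* CONFIG_FS_LITTLEFS_BLOCK_COUNT */
  int32_t  block_cycles;   /* CONFIG_FS_LITTLEFS_BLOCK_CYCLE (-1 = off) */
  uint32_t cache_size;     /* CONFIG_FS_LITTLEFS_CACHE_SIZE */
  uint32_t lookahead_size; /* CONFIG_FS_LITTLEFS_LOOKAHEAD_SIZE */
};

/* The lookahead buffer is a bitmap: one bit tracks one block. */

static uint32_t lookahead_blocks(const struct lfs_geometry *g)
{
  return g->lookahead_size * 8u;
}

/* Total number of bytes the filesystem addresses. */

static uint64_t fs_bytes(const struct lfs_geometry *g)
{
  return (uint64_t)g->block_size * g->block_count;
}

/* Divisibility rules from lfs.h plus the 128-byte minimum block size. */

static bool geometry_ok(const struct lfs_geometry *g)
{
  if (g->read_size == 0 || g->prog_size == 0 || g->cache_size == 0)
    {
      return false;
    }

  return g->block_size >= 128 &&
         g->block_size % g->read_size == 0 &&
         g->block_size % g->prog_size == 0 &&
         g->cache_size % g->read_size == 0 &&
         g->cache_size % g->prog_size == 0 &&
         g->block_size % g->cache_size == 0;
}

/* Programs needed to fill one NAND page at prog_size granularity;
 * for the W25N01GV this must not exceed 4.
 */

static uint32_t programs_per_page(uint32_t page_size,
                                  const struct lfs_geometry *g)
{
  assert(g->prog_size != 0);
  return page_size / g->prog_size;
}

/* The two configurations from this PR. */

static const struct lfs_geometry fram_32k =
{
  .read_size = 1, .prog_size = 1, .block_size = 128, .block_count = 256,
  .block_cycles = -1, .cache_size = 1, .lookahead_size = 16,
};

static const struct lfs_geometry w25n01gv =
{
  .read_size = 512, .prog_size = 512, .block_size = 131072,
  .block_count = 1024, .block_cycles = 500, .cache_size = 512,
  .lookahead_size = 512,
};
```

Both configurations pass `geometry_ok()`. The FRAM covers 128 × 256 = 32768 bytes, and the W25N01GV covers 131072 × 1024 bytes (128 MiB). Lowering `prog_size` to 256 for the W25N01GV would make `programs_per_page(2048, ...)` return 8, which is more than the 4 programs per page the part allows.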
For **NOR** flash (W25Q), the granularity can be even smaller because NOR flash doesn't have the limitations of NAND flash. I haven't tested it yet.

## Impact

This doesn't have any negative impact on existing projects. It only extends capabilities.

## Testing

I have been hammering the FRAM configuration above with a BSON file for a couple of days now. I'll report here if I detect any issues.