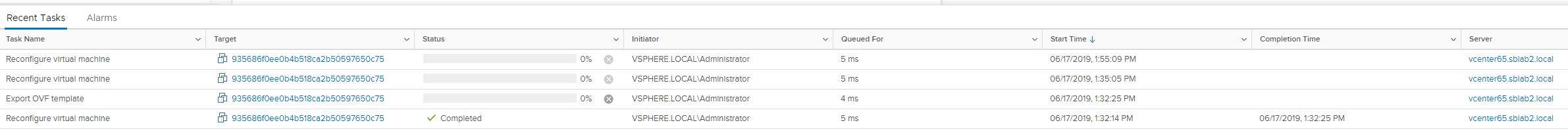

andrijapanicsb opened a new issue #3408: VMware Worker VM doesn't get recycled after a configured timeout
URL: https://github.com/apache/cloudstack/issues/3408

<!--
Verify first that your issue/request is not already reported on GitHub.
Also test if the latest release and master branch are affected too.
Always add information AFTER these HTML comments, but no need to delete the comments.
-->

##### ISSUE TYPE
<!-- Pick one below and delete the rest -->
 * Bug Report
 * Improvement Request
 * Enhancement Request
 * Feature Idea
 * Documentation Report
 * Other

##### COMPONENT NAME
<!-- Categorize the issue, e.g. API, VR, VPN, UI, etc. -->
~~~
API
~~~

##### CLOUDSTACK VERSION
<!-- New line separated list of affected versions, commit ID for issues on master branch. -->
~~~
4.11.2 (didn't test previous ones)
~~~

##### CONFIGURATION
<!-- Information about the configuration if relevant, e.g. basic network, advanced networking, etc. N/A otherwise -->
VMware 6.5u2 (should be irrelevant)

##### OS / ENVIRONMENT
<!-- Information about the environment if relevant, N/A otherwise -->
mgmt = CentOS 7 (irrelevant)

##### SUMMARY
<!-- Explain the problem/feature briefly -->
The temporary VMware worker VM used to export a volume snapshot is not recycled (i.e. removed) after the timeout is reached, even when it is configured to be removed.

##### STEPS TO REPRODUCE
<!-- For bugs, show exactly how to reproduce the problem, using a minimal test-case. Use Screenshots if accurate. For new features, show how the feature would be used. -->
<!-- Paste example playbooks or commands between quotes below -->
~~~
Set the following global settings:
- job.expire.minutes : 1
- job.cancel.threshold.minutes : 1
- vmware.clean.old.worker.vms : true

Create a volume snapshot.
After 2 x (job.expire.minutes + job.cancel.threshold.minutes) = 4 minutes (240 sec), the job will time out / fail with the following messages in the logs:

2019-06-17 11:35:26,459 INFO [c.c.h.v.m.VmwareManagerImpl] (DirectAgentCronJob-40:ctx-dbfaf1a2) (logid:bff5a57c) *Worker VM expired, seconds elapsed: 252*
2019-06-17 11:35:26,463 INFO [c.c.h.v.r.VmwareResource] (DirectAgentCronJob-40:ctx-dbfaf1a2) (logid:bff5a57c) *Recycle pending worker VM: 935686f0ee0b4b518ca2b50597650c75*

but the tasks remain on the vCenter side:

The way to "clean it manually" is to stop/kill the OVF export task in vCenter, after which the VM reconfiguration task and finally the removal task (not visible on the image) will be executed, and the worker VM will be removed as per the expected behavior.

The issue seems to be that we are NOT killing the OVF export task once the timeouts are reached in ACS, which is the required step.
~~~
<!-- You can also paste gist.github.com links for larger files -->

##### EXPECTED RESULTS
<!-- What did you expect to happen when running the steps above? -->
~~~
The OVF export task is stopped/killed and the worker VM gets removed.
~~~

##### ACTUAL RESULTS
<!-- What actually happened? -->
<!-- Paste verbatim command output between quotes below -->
~~~
The worker VM keeps running, as well as the OVF export task.
~~~
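For anyone checking the numbers, here is a minimal sketch of the timeout arithmetic described in the reproduction steps. It only restates the formula quoted in this issue, 2 x (job.expire.minutes + job.cancel.threshold.minutes). The function names are hypothetical and are not taken from the CloudStack code base, and the `>=` comparison is an assumption about how the expiry check might be done.

```python
def worker_vm_timeout_seconds(job_expire_minutes: int,
                              job_cancel_threshold_minutes: int) -> int:
    """Timeout as described in this issue, converted to seconds.

    Hypothetical helper: it mirrors the formula from the report,
    2 x (job.expire.minutes + job.cancel.threshold.minutes).
    """
    return 2 * (job_expire_minutes + job_cancel_threshold_minutes) * 60


def is_worker_vm_expired(seconds_elapsed: int, timeout_seconds: int) -> bool:
    """Assumed check: the worker VM counts as expired once the timeout has elapsed."""
    return seconds_elapsed >= timeout_seconds


# Values from the reproduction steps: both settings are 1 minute.
timeout = worker_vm_timeout_seconds(1, 1)
print(timeout)                             # 240
# The log line reports "seconds elapsed: 252".
print(is_worker_vm_expired(252, timeout))  # True
```

With both settings at 1 minute the timeout is 240 seconds, so the 252 seconds in the log is past the threshold. That matches the "Worker VM expired" message: expiry detection works, and what is missing is cancelling the OVF export task.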

----------------------------------------------------------------
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

With regards,
Apache Git Services